* In modern times, few seriously doubt that the brain is the seat of human thought and intelligence. There is still much to be learned about the brain, but we understand its general architecture, and the operation of the tens of billions of neurons, nerve cells, that make it up. The human mind is a product of the operation of the brain, though some suggest that a direct correlation of the mind with the activity of neurons is at least incomplete. There are a few who even go so far as to say the mind is beyond comprehension -- but in reality, nobody can identify any real obstacle to its investigation.

* If the mind is the brain in run-time, aware of the world and itself, any scientific consideration of the workings of the mind is necessarily predicated on an understanding of the workings of the brain. Taking this pragmatic approach requires outlining the structure of the human brain.

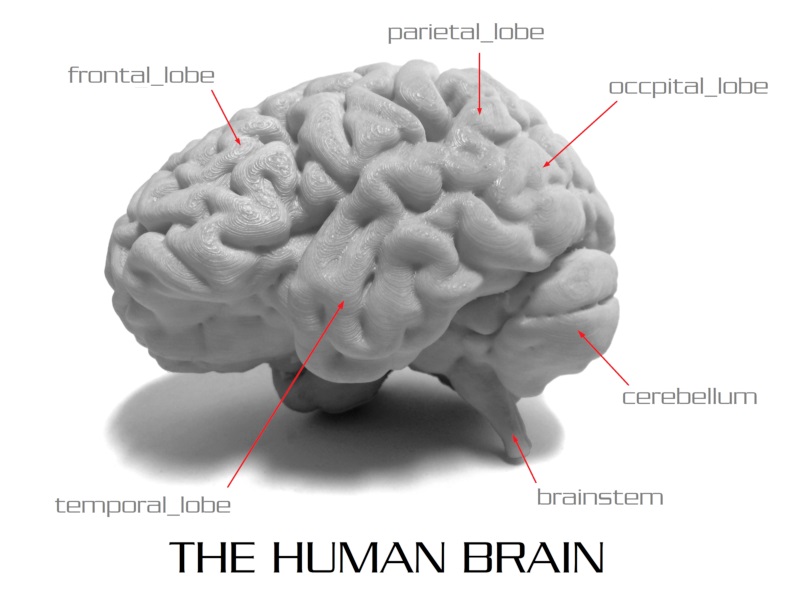

The brain is an elaborate organ, but it can be divided into three main components:

Brain functions have been mapped to particular elements of the brain by scanning the brain while specific activities are being performed, with the scan showing what elements are activated for each activity. Subjects with damage to specific parts of the brain have also provided insights, though ones we would prefer to have obtained under less unfortunate circumstances.

It should be noted that, while it is possible to define classes of functions for the various elements of the brain, the brain does not feature the neat modularization typical of human-designed machines. Any activity of the brain may well involve multiple elements, and though we may identify a particular region of the brain as a locus of a function, the elements supporting that function may not be restricted to that region.

There also may be a degree of variation in architecture and function from human to human. Children who suffer selective brain damage may well not exhibit any visible problems when they grow up, the brain having rearranged itself to compensate; adults with the same damage may never be able to compensate.

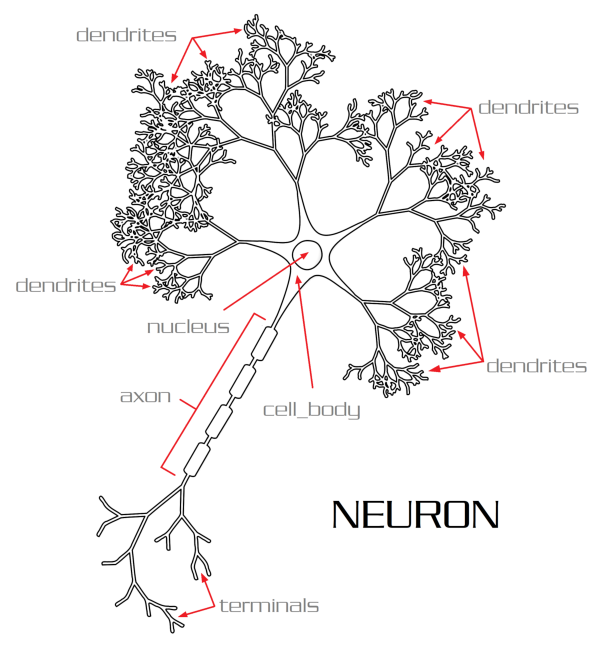

* The brain is effectively a mass of nerve cells or "neurons". Neurons are long, slender cells, with a "tree" of many branching threadlike "dendrites" on the input connecting to a cell body, with a long "axon" snaking out from the cell body to disperse, at its end, into many branching "terminals". The terminals of one neuron bridge to the dendrites of others via gaps known as "synapses", separated by a "synaptic cleft" -- a very narrow gap, about 20 nanometers wide. A terminal releases "neurotransmitter" biomolecules from its end that temporarily hook up with "receptors" on the end of the dendrite. Some neurotransmitters will activate or "excite" dendrites, while others will damp them down or "inhibit" them.

When a sufficient number of dendrites are activated, a neuron "fires", performing rapid changes in concentrations of ions between the interior and exterior of the neuron via pores called "ion channels", the result being that an electrical signal, or "action potential" or "nerve impulse", travels down the axon, to activate the terminals driving the dendrites of other neurons. Activity over a given synaptic connection is generally adaptive, making it more or less likely that a dendrite will be activated in the future, providing a tiny element of memory.

This description is of a stereotypical or "canonical" neuron, but they come in a wide range of configurations. Instead of connections from axon to dendrite, there may be connections from axon to cell body; from axon to axon; or from dendrite to dendrite; or even backwards, from dendrite to axon. Synaptic connections may not have memory capabilities. There are also "electrical" synapses, with synaptic clefts an order of magnitude shorter than those of the "chemical" synapses described above, permitting direct ion exchange across the cleft. They can be seen as "hardwired" connections; electrical synapses work faster and more reliably than chemical synapses.

There are roughly a hundred known neurotransmitter types. No one neurotransmitter is exclusively linked to a single brain function; one neurotransmitter is linked to several functions, while each function is linked to several neurotransmitters. Neurotransmitters are organized into various "systems", with a -- not necessarily exclusive -- set of neurotransmitters associated with each system, and each system having a range of effects. Some neurotransmitters have a range of physiological effects beyond their neurological effects. The two most prevalent neurotransmitters are:

Other significant neurotransmitters include:

In some cases, oral supplements of neurotransmitters may be taken as a therapeutic; melatonin supplements, for example, can be used to improve sleep patterns. Psychoactive drugs alter neurotransmitter activity to obtain their effects. For example:

Neurotransmitters and psychoactive drugs are a big and complicated subject unto themselves. In any case, while there are features common among neurons, there is no "universal" neuron configuration. Even two neurons that have similar general structures may have subtle differences, for example in the receptors in the dendrites, or in the ion channels. Again, evolution does not produce the tidy component modularization seen in human-made machines. It should also be noted that the brain contains a large number of "glial" cells that are smaller but more numerous than neurons; they provide a range of support functions, such as insulating neurons from each other, and ensuring blood flow to keep neurons working.

The brain's neurons form an intertwined "neural network". It's huge -- current estimates give the average human brain as made up of 86 billion neurons, and given that each may have thousands of synapses, that means hundreds of trillions of synapses. It is this huge number of neurons that gives the human brain its extraordinary processing capabilities; a neuron can only fire from 5 to 50 times a second, making it much slower than an electronic equivalent, and so it's only the "massive parallelism" of the brain that allows it to accomplish its feats in real time.
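The synapse estimate above is simple multiplication. As a back-of-envelope sketch, assuming that "thousands of synapses" means somewhere between 1,000 and 10,000 per neuron (those bounds are an illustrative assumption, not figures from the text):

```python
# Back-of-envelope synapse count.
NEURONS = 86_000_000_000            # estimate quoted in the text
SYNAPSES_PER_NEURON_LOW = 1_000     # assumed lower bound for "thousands"
SYNAPSES_PER_NEURON_HIGH = 10_000   # assumed upper bound for "thousands"

TRILLION = 10**12

low_total = NEURONS * SYNAPSES_PER_NEURON_LOW
high_total = NEURONS * SYNAPSES_PER_NEURON_HIGH

print(f"{low_total // TRILLION} to {high_total // TRILLION} trillion synapses")
```

Under those assumed bounds, the total runs from tens of trillions at the low end to hundreds of trillions at the high end.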

* While we have general ideas of the overall organization of the brain, and a good understanding of the operation of neurons, we have a poor understanding of how to bridge from HERE -- the operation of individual neurons -- to THERE -- the overall operation of the brain.

Cognitive pragmatists have no real doubt that it all renders down to "plain old neurons (PON)", since nobody can point to any other tangible element in the brain that could support cognition. What else is there that we can actually see? However, the PONs theory was not always obvious; indeed, it wasn't until the work of the pioneering biomedical researcher Santiago Ramon y Cajal (1852:1934) that the "neuron doctrine", as it was called, became established, with the nervous system seen as made up of discrete cells, the neurons; it was previously thought to be a continuous net. He shared the 1906 Nobel Prize for medicine for his discovery. He had an artistic streak, with his tidy drawings of neural structures and the like regarded as classics.

Following Ramon y Cajal, in 1943 the neurophysiologist Warren S. McCulloch (1898:1969) and the logician Walter Pitts (1923:1969) published a paper titled "A Logical Calculus Of The Ideas Immanent In Nervous Activity" -- which outlined the function of the neuron as a "logic element", and described the architecture of artificial neurons. Their work was generally theoretical; the true pioneer of the view of the brain as an architecture based on neurons was Canadian psychologist Donald O. Hebb (1904:1985), who sketched out the idea in his 1949 book THE ORGANIZATION OF BEHAVIOR. As Hebb put it in that document:

QUOTE:

When an axon of cell A is near enough to excite cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A's efficiency, as one of the cells firing B, is increased.

END_QUOTE

This has become known as "Hebb's Law", describing "Hebbian learning", the law being sometimes expressed as: "Neurons that fire together wire together." Hebb saw the brain's functions as being performed by groups of neurons, what he called "cell assemblies". With the benefit of hindsight, Hebb's insights seem almost obvious.
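Hebb's Law can be caricatured in a few lines of code. The following is a minimal sketch, not Hebb's own formulation: the function name `hebbian_update`, the learning rate, and the firing history are all invented for illustration. The connection from cell A to cell B is strengthened only on time steps where both fire.

```python
def hebbian_update(weight, pre_active, post_active, rate=0.1):
    """Strengthen the A-to-B connection only when both cells fire together."""
    if pre_active and post_active:
        return weight + rate
    return weight

weight = 0.0
# Five time steps of (A fired, B fired); they fire together on three of them.
history = [(True, True), (True, False), (True, True), (False, True), (True, True)]
for a_fired, b_fired in history:
    weight = hebbian_update(weight, a_fired, b_fired)

print(round(weight, 2))
```

Real synapses can also weaken over time; this sketch leaves that out, so the weight can only grow.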

Not at all incidentally, neurons are known to be "noisy"; any one neuron may fire below its average threshold, or it may not fire when it's well above its average threshold. Most of the time, noisy neurons don't amount to anything in particular, their action disappearing into the brain neural network -- but sometimes they do have a conscious and even significant effect. For example, sometimes we spontaneously recall, for no apparent reason, long-lost memories; typically these ancient memories are mere curiosities, quickly forgotten again, but on occasion we obtain important insights from them.

The "noisiness" of the brain was underlined by research performed by Hebb at McGill University in the early 1950s, in which paid test subjects were placed in a small room featuring little more than a bed, wearing blacked-out goggles and ear protectors, with a speaker feeding white noise into the room. They also wore gloves and cardboard tubes over the arms to damp out perceptions from touch.

Hebb had hoped subjects would go for several weeks, but not many made it for more than a few days, none more than a week. As the test wore on, they tended to suffer from vivid hallucinations. Their hallucinations would start out as points of light, lines or shapes, to become more elaborate -- seeing, for example, a procession of squirrels marching with sacks over their shoulders, or of eyeglasses marching down a street. The test subjects had auditory and tactile illusions as well, hearing a music box or choir, feeling an electric shock when touching an object.

In effect, neural noise provided cues that were amplified by the brain, there being no competition to damp out the noise effects. Subjects went into the experiment believing they would be able to think about things of importance undisturbed -- but in reality, their concentration deteriorated, with tests performed on subjects after they left the isolation room demonstrating that they suffered from significant, if temporary, cognitive impairment. Some reported giddiness, as if the world around them were in motion, or reported that objects constantly changed shape and size, until they were able to re-adjust to the real world. The noisiness of the brain isn't just an amusing fact; as discussed later, it is central to its operation.

* The primary tools for probing the brain are "electro-encephalography" -- blessedly abbreviated as "EEG" -- and "functional magnetic resonance imaging (fMRI)." EEG is typically conducted via a set of electrodes placed on the scalp, typically 21; there are high-density caps or nets that may have 256 electrodes. Voltages are typically measured as the difference between two electrodes, though they may alternatively be measured as the difference between the voltage on one electrode, and the average of the voltages from all the others.
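The two measurement schemes just described differ only in what an electrode's voltage is compared against. A minimal sketch, with hypothetical function names, made-up electrode labels, and made-up readings in microvolts:

```python
def bipolar(voltages, a, b):
    """Voltage of electrode a measured against electrode b."""
    return voltages[a] - voltages[b]

def average_reference(voltages, name):
    """Voltage of one electrode measured against the mean of all the others."""
    others = [v for label, v in voltages.items() if label != name]
    return voltages[name] - sum(others) / len(others)

# Illustrative readings only; labels and values are invented for the example.
sample = {"E1": 12.0, "E2": 8.0, "E3": 4.0, "E4": 0.0}

print(bipolar(sample, "E1", "E2"))
print(average_reference(sample, "E1"))
```

The same raw readings give different traces depending on the reference chosen, which is one reason EEG localization is coarse.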

EEG monitors the large-scale electrical activity of the brain, with limited resolution, defined by the electrode pairings, in localizing activity to any particular part of the brain -- and then only able to track what's going on at the surface of the brain. An EEG stereotypically reveals rhythmic wave activity in the brain, which has been categorized into a set of bands, corresponding to general levels of brain activity:

There is also a "mu" signal from 8 to 13 Hz, overlapping alpha, and corresponding to the inactive state of motor neurons. In addition, there are transient signals -- for example, momentary disruptions, sometimes associated with epilepsy, and more normally signals associated with conscious realization, of which a bit more is said later.

* Although conventional EEG can only observe electrical activity on the surface of the brain, activity inside the brain can be observed via surgically implanted electrodes. Implanted electrodes are, for example, used to locate brain regions associated with epilepsy. In neuropsychological studies, implanted electrodes have been used to map brain activity versus particular stimuli, in some cases with a surprising degree of selectivity: in one subject, the only time the electrode registered activity was when that subject was shown a picture of the Sydney Opera House, or read the phrase "Sydney Opera House".

Implanted electrodes can also stimulate the brain, which is the basis for a set of therapeutic schemes known as "deep brain stimulation (DBS)". It is now widely used to treat Parkinson's disease, and other afflictions such as obsessive-compulsive disorder. Selective stimulation of the brain has a long history of use in neuropsychological research, going back to the work of the American-Canadian neurosurgeon Wilder Penfield (1891:1976) from the 1940s.

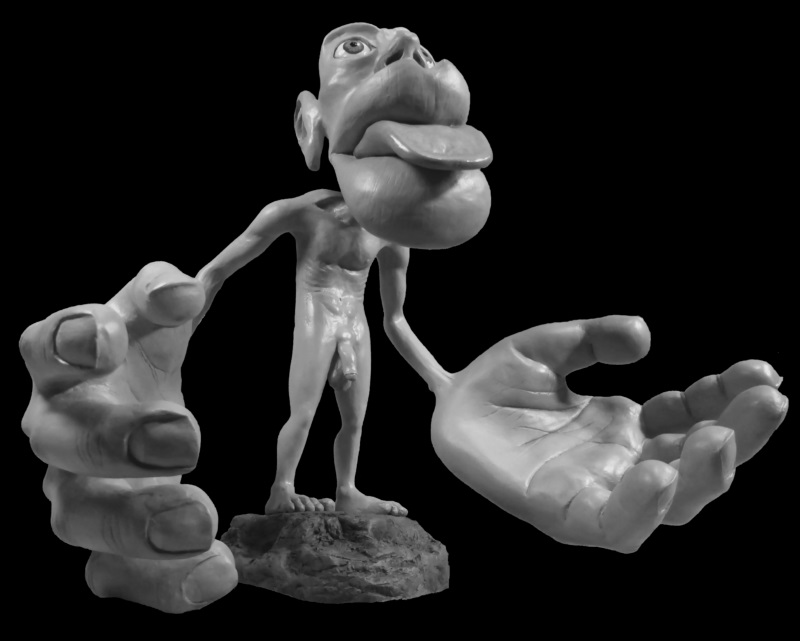

Penfield performed neural probes of epileptics, who had only been given a local anesthetic to allow opening up the skull; the brain itself does not feel pain, so patients could report on their experiences while he probed inside. Using this approach, Penfield was able to generate maps of the sensory and motor-control regions of the cerebral cortex. His mapping of elements of the body to their respective controlling regions in the brain, defining what is called a "cortical homunculus", is still used today, though modern research suggests that it needs to be updated.

In the course of his probes, Penfield also discovered that he could generate long-forgotten, vivid memories in subjects. This part of his work has led to the wild notion that nothing in our experience is ever forgotten, that the brain is continually soaking up experiences, with the memory waiting to be retrieved when given the proper stimulation.

There is nothing in his work that honestly suggests that; over time, memories are known to fade or become overlaid and scrambled -- with only a few of the memories, those that stuck for one reason or another, persisting, and possibly confused in recollection. While the memories recovered by deep stimulation may be vivid, they are not necessarily all that usefully detailed. There's no reason to think they are "frames" from some life-long "Cartesian movie" of the brain's experiences.

As an alternative to implanted electrodes, it is possible to non-invasively achieve brain stimulation through strong magnetic pulses. "Transcranial magnetic stimulation (TMS)" is used to locate damaged regions of the brain from strokes and such; it may also have utility as a psychiatric treatment. It is of course not as focused as implanted electrodes, and has some risk of causing seizures. Some experiments with TMS suggest that stimulation of a specific region of cerebral cortex can generate a "religious experience" -- but such stories are controversial, with skeptics suggesting that such experiences are simply provoked by suggestion, and that the claims are not supported by double-blind studies.

* As for fMRI, it operates by extracting signals from changes in the energetic state of hydrogen nuclei -- protons -- in the water that makes up the bulk of the human body. An fMRI scanner maps the water concentration in tissues, with different tissues having different concentrations, and so showing up as different grayscales in the scan. The water concentration also varies with blood flow; in the brain, blood flow increases in active regions, and so fMRI can give a map of brain activity. In fMRI terms, this is known as "blood oxygenation level dependent (BOLD)" activity.

An fMRI scanner looks like a big drum lying on its side; to be scanned, a subject lies on her back, with her head inserted into the center of the drum. The drum contains three layers of elements:

The data from the receiver array is digitally crunched, and then displayed as convenient, with a user able to obtain multiple cross-sections of the subject's brain as desired. The scan starts out with an "averaged" fMRI image of the brain, with specific activity revealed by subtracting the averaged fMRI image. This capability is where the term "functional" comes from in the acronym "fMRI"; instead of simply scanning for structure, an fMRI scanner reveals function as well. In neurophysical research, subjects are asked to perform various tasks, with the corresponding BOLD activity in the brain then observed by the fMRI scanner.
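The subtraction step can be illustrated with a toy example. This is only a sketch under heavy simplification: real fMRI analysis involves far more signal processing and statistics, and the function names and the tiny 2 x 2 "images" here are invented for illustration.

```python
def average_image(images):
    """Voxel-wise mean of several same-sized 2-D images."""
    count = len(images)
    rows, cols = len(images[0]), len(images[0][0])
    return [[sum(img[r][c] for img in images) / count for c in range(cols)]
            for r in range(rows)]

def subtract(image, baseline):
    """Voxel-wise difference between an image and a baseline image."""
    return [[a - b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(image, baseline)]

# Made-up signal values: two resting scans and one scan taken during a task.
rest_scans = [
    [[10.0, 10.0], [10.0, 10.0]],
    [[12.0, 10.0], [8.0, 10.0]],
]
task_scan = [[11.0, 15.0], [9.0, 10.0]]

baseline = average_image(rest_scans)
difference = subtract(task_scan, baseline)
print(difference)
```

Only the voxel whose signal rose above the averaged baseline stands out in `difference`; the rest cancel to zero.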

The advantage of fMRI is that it provides non-invasive, three-dimensional mapping of brain activity to a good level of resolution -- without requiring use of troublesome radioactive tracers, used with scanning techniques like positron emission tomography. The primary disadvantage is that the subject must lie flat and still, meaning it's not all that useful for monitoring the brain when a subject is engaged in physical activity. However, Dehaene pointed out that imagining a physical activity activates the brain in much the same way as that corresponding physical activity does -- with that assumption validated by using fMRI to observe subjects performing, or alternatively imagining, simple actions.

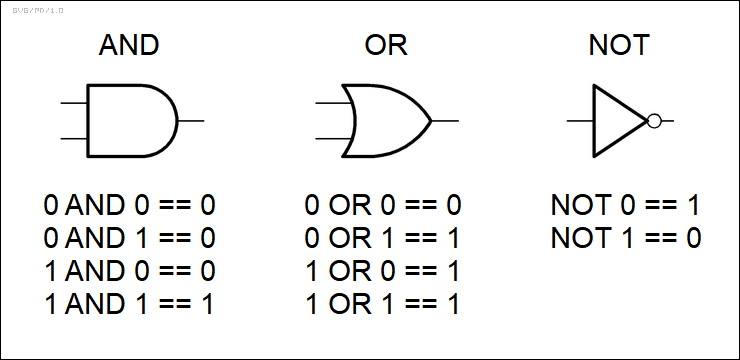

* While our brain is often compared to a computer, it doesn't work like a traditional digital computer. The central processing unit chip of a stored-program computer is built up of basic switching elements, called "logic gates". The gates accept high or low voltage inputs -- abstracted as a bit, a "logic 1" or "logic 0" -- and then generate a "1" or "0" bit on the output. The gates perform the basic logic functions of AND, OR, and NOT:

All the logic operations on the processor chip are derived from these three basic functions, supported by memory cells storing inputs, outputs, and intermediate data. A chip designer will use libraries of high-level modules, each with a large number of devices, as building blocks for the processor chip.
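To see how other operations can be derived from AND, OR, and NOT, here is a minimal sketch that builds an exclusive-OR and a one-bit "half adder" from the three basic gates. The function names are chosen for illustration, with bits represented as the integers 0 and 1.

```python
def AND(a, b):
    return a & b

def OR(a, b):
    return a | b

def NOT(a):
    return 1 - a

def XOR(a, b):
    """True when exactly one input is 1: (a OR b) AND NOT (a AND b)."""
    return AND(OR(a, b), NOT(AND(a, b)))

def half_adder(a, b):
    """Add two bits, returning (sum_bit, carry_bit)."""
    return XOR(a, b), AND(a, b)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, half_adder(a, b))
```

Chaining such adders gives multi-bit arithmetic, which is the sense in which higher-level modules are built from the basic gates.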

The processor steps through a series of program instructions in memory, with a train of "clock pulses" keeping the system's operation synchronized. The processor performs operations as directed by the program, possibly jumping to different sets of instructions as per tests on the results of operations: the processor can make decisions. The program is highly deterministic in its operation -- though given an elaborate enough program, it may not be so easy to predict what it will do next.

Many simple digital devices -- a pocket calculator, for example -- only run a single program. A calculator accepts keyboard inputs, then "parses" them to determine what commands a user is entering. When the user presses the "=" key, the calculator performs the specified calculation and sends the result to the output, the display.

A modern smartphone is much more powerful and flexible, allowing multiple different application programs, "apps", to do whatever a user wants: calculator, calendar, address book, messaging, music player, video player, games, whatever. An operating system or "OS" -- the system of programs that runs the smartphone -- allows apps to be installed, run and exited, or deleted, and controls their access to the smartphone's hardware.

The smartphone has much more sophisticated input-output (I/O) capabilities, using a touchscreen and voice for input, the touchscreen and an audio system for output -- and of course can perform wireless communications. It can interface with a wide range of I/O devices through wired or wireless connections, with all I/O devices managed by the OS. The OS can also run multiple apps in parallel, either through time-slicing their execution or, in modern smartphones, multiple processor "cores". Some of the cores may be specialized processors, for example to perform AI operations.

The "biological neural network (BNN)" that is the brain looks nothing like a digital computer. It is easiest to describe a BNN by starting with "artificial neural networks (ANN)" -- in a sense, computers that imitate the brain, following in the pioneering steps of McCulloch and Pitts. ANNs are much simpler and more uniform than BNNs, but operate on the same broad principles. ANNs are made up of an array of electronic "artificial neurons". Each artificial neuron has a set of inputs (in effect, dendrites), with "weights" assigned to each input. If the sum of the weights on activated inputs exceeds a certain threshold -- the threshold can be adjusted -- the neuron fires, generating an output signal that may be distributed to the inputs of many other neurons.
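The firing rule just described fits in a couple of lines. A minimal sketch, with an invented function name and arbitrary weights and threshold:

```python
def neuron_fires(weights, active, threshold):
    """Fire if the summed weights of the activated inputs exceed the threshold."""
    total = sum(w for w, on in zip(weights, active) if on)
    return total > threshold

# Illustrative values only.
weights = [0.5, 0.3, 0.4]
threshold = 0.6

print(neuron_fires(weights, [1, 0, 0], threshold))  # one input active
print(neuron_fires(weights, [1, 1, 0], threshold))  # two inputs active
```

A negative weight would play the part of an inhibitory input, pulling the sum back down below the threshold.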

Artificial neurons can be implemented in hardware, software, or some mix of both. A simple ANN, say one to analyze low-resolution images, might have an input grid, accepting images from a camera, with 256 x 256 connections -- one for each camera pixel, 65,536 connections in all. The inputs are connected to a set of neurons; there might well be fewer neurons than input connections, or there might be more. The neural net is organized in layers, featuring, in this case, a set of layers to identify "primitives" in an image, primarily lines, feeding layers to identify shapes, and then specific objects from those shapes.

Each neuron is programmed by adjusting its input weights and firing threshold -- typically by "training", in this case generating images for input to the ANN, and telling the ANN what they represent. Practical ANNs have, as a rule, vastly more artificial neurons, and are "deep", with hundreds of levels of neurons.
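As a toy illustration of training, the sketch below teaches a single artificial neuron the AND function by nudging its weights and threshold whenever it answers wrongly. The text does not say which training method is used; this uses the classic perceptron learning rule as a stand-in, and the names `fires`, `train`, and `AND_EXAMPLES` are invented for the example.

```python
def fires(weights, threshold, inputs):
    """True if the summed weights of the active inputs exceed the threshold."""
    return sum(w for w, x in zip(weights, inputs) if x) > threshold

def train(examples, n_inputs, step=1, epochs=20):
    """Perceptron rule: after each wrong answer, shift weights and threshold."""
    weights = [0] * n_inputs
    threshold = 0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - int(fires(weights, threshold, inputs))
            if error == 0:
                continue  # correct answer, nothing to adjust
            for i, x in enumerate(inputs):
                if x:
                    weights[i] += step * error
            threshold -= step * error
    return weights, threshold

# Each example pairs an input pattern with the output we want for it.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, threshold = train(AND_EXAMPLES, n_inputs=2)

for inputs, target in AND_EXAMPLES:
    print(inputs, fires(weights, threshold, inputs))
```

Nobody tells the neuron what weights to use; it is only told when it is wrong. Practical deep networks use more elaborate training schemes, but the principle of adjusting weights from labeled examples is the same.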

Even deep ANNs, however, are only simple analogues to BNNs, which are far more disorderly. While a BNN will have layers, they're not necessarily well-partitioned, and there may be feedback between layers, with outputs of one neuron connected to neurons preceding it -- or even back to itself. In short, it's a rat's nest to end all rat's nests, and mapping out every single synaptic connection in the brain, hundreds of trillions of them, would be extremely difficult, and the result would probably be too cumbersome to be useful. In addition, the noisiness of neurons also results in a certain noisiness of the operation of the brain's neural network: although its overall operation can be characterized, reliably predicting what it's going to do next may be problematic.

* It is not, however, necessary to have a complete understanding of the structure of the brain to understand its cognitive functions. There are only a few tens of thousands of genes in the human genome, so it's not like the structure of the brain is determined down to the synapse level. Instead, before birth the brain grows as directed by genetically-determined rules that drive its organization; it continues to grow after birth, with its organization then also determined by environmental stimuli. Infants who are not exposed to language during their formative period never learn to understand it well. Although they had a genetic predisposition to create the neural structures needed to handle language, their stunted development prevented the creation of those structures.

To understand the operation of the brain, we need to understand the specifics of neuron function, and then the "neural codes", the rules by which the neurons are organized as a collective. We know quite a bit about neurons, not so much about the rules -- which remain a challenge. However, even given this ignorance, we can still usefully consider the mind as a reflection of the brain's operation, in much the same way as we can consider the operation of software a reflection of the operation of a computer.

Suppose we have an "Alien Attack" computer game on a smartphone, with the player blasting away at ranks of alien invaders. We can observe the app to determine its behaviors, to see how it works. First, we divide it into elements -- just call them "objects", for convenience -- consisting of the game controls, an icon for the player's ship, and icons for the different alien attackers. For each of the objects, we can specify the attributes of the object -- what its shape and colors are -- and determine the functions of each.

Since there's a set of alien invaders, we can specify as an object the set of all the alien invader objects -- that object defining their numbers and types, as well as how they behave as a collective. We can also specify the "game board" on which the pieces play as an object, with its attributes being its size and background appearance, along with rules for how the pieces behave on the game board. At the very top level, we can specify the game as an object that encapsulates all the lower-level objects, and the rules by which the game works.

We have now created a software model of the Alien Attack game that functionally describes the operation of the game. The game was actually written in a high-level somewhat-English-like programming language, which was in turn translated by a "compiler" program into the binary machine codes that actually do the work on the computer hardware. However, if we simply want to know the game's behavior, how it works, all we need is the software model. We don't need any more than a superficial understanding of the machine codes and hardware. If we wanted to implement the game ourselves, the software model could be used as a specification for writing a program to do the job.
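A software model of this kind could be written down along the following lines. This is a rough sketch only: the class names, attributes, and the losing rule are all invented for illustration, since the text does not spell out the game's actual rules.

```python
from dataclasses import dataclass, field

@dataclass
class Sprite:
    """An on-screen object: the player's ship or one alien invader."""
    shape: str
    color: str
    x: int
    y: int

    def move(self, dx, dy):
        self.x += dx
        self.y += dy

@dataclass
class AlienFleet:
    """The set of all invaders, plus how they behave as a collective."""
    aliens: list = field(default_factory=list)

    def advance(self, dy=1):
        for alien in self.aliens:
            alien.move(0, dy)

@dataclass
class GameBoard:
    """The playing field, with its size and background appearance."""
    width: int
    height: int
    background: str = "black"

    def contains(self, sprite):
        return 0 <= sprite.x < self.width and 0 <= sprite.y < self.height

@dataclass
class AlienAttack:
    """Top-level object encapsulating the lower-level objects and the rules."""
    board: GameBoard
    player: Sprite
    fleet: AlienFleet

    def invaded(self):
        # Assumed rule: the player loses once any alien reaches the player's row.
        return any(alien.y >= self.player.y for alien in self.fleet.aliens)

game = AlienAttack(
    board=GameBoard(10, 10),
    player=Sprite("ship", "green", 5, 9),
    fleet=AlienFleet([Sprite("saucer", "red", 2, 0), Sprite("saucer", "red", 4, 0)]),
)
print(game.invaded())
```

Nothing here says how the icons are drawn or what machine codes run underneath; the model captures behavior only, which is the point being made.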

The Alien Attack game can be regarded as a "virtual machine". Although the game uses the smartphone hardware to operate, the app only exists as a set of programmed behaviors -- including the generation of icons for objects, the rules of operation of those objects, and the interactions of the objects -- specified by software. We can create other programs to create other games, like chess, or other apps, each being a different virtual machine, operating on the same smartphone hardware. We could create vast numbers of different virtual machines running on the same smartphone hardware.

Earlier, the mind was defined as "the brain in run-time". It is more elegant to define the mind as a virtual machine, a structured system of behaviors generated by the brain's neurons and its higher-level architecture, and no longer worry about the underlying brain hardware unless we need to. Different people have generally similar brain hardware, but they may have very different minds, different virtual machines. As with the Alien Attack game, the virtual machine of the mind can be partitioned into functions that define behaviors -- perception, memory, feelings, thinking, motor control, and so on -- with models constructed on the basis of those elements. We understand the mind to the extent that we can diagram a virtual machine that correctly, and non-trivially, matches the behavior of the mind.

* As a footnote, the software model given for the Alien Attack game is based on what is known as "object-oriented programming (OOP)" -- a scheme well-established in modern software design. There are other approaches to devising a software model, but OOP provides a degree of insight into the functioning of the human mind.

Suppose Alice -- of the comedy team of Alice & Bob, who often show up in discussions of physics, cryptology, philosophy, and so on, Bob to arrive later -- sees a cat. She immediately recognizes it for what it is, her perception having been matched to a cat object in her mind. If she doesn't know the specific cat and doesn't pay much attention to it, that's as far as it goes; she has recognized the cat only as a "class". If she recognizes a cat she knows -- Ella, Sam, Max, Fluffy -- she recognizes it as an "instance" of "class cat".

"Class cat" in practice only means "housecats"; we can define a "superclass" of "class felines" to cover housecats, wildcats, cheetahs, lions, tigers, and so on -- with felines leading to the superclass of "carnivores", then "mammals", then "animals". In practice, we also have convenient superclasses like "class small_mammal" -- Alice may be able to make out a small mammal in the dark, but not be sure if it's a cat, raccoon, or skunk without shining a light on it -- and then run, if it turns out to be "class skunk".

"Class cat", then, is a "subclass" of both "class felines" and "class small_mammal". It can be a subclass of any class as is convenient: "household pets", "predators", "household expense", and so on. Objects are linked into hierarchies, or "trees", with the higher-level objects effectively encompassing, or "subsuming", lower-level objects, and the lower-level objects "inheriting" the properties of the higher-level ones.

"Class cat" includes various "attributes" of cats, such as gender, fur length, fur color, size, breed, and so on; as well as various "functions" that describe the behavior of a cat, such as purring, meowing, catching birds, and so on. Each instance of class cat will have specifically defined attributes: for example, "Ella" is a female, a black shorthair cat with unusually large ears. Of course, each attribute or function can be defined as an object as well; "class color" is an attribute of "class cat", with the "color" of "Ella" being "black". Incidentally, "color" is an "atomic" object, because it's fundamental, undefinable in terms of any other object -- except as an instance of the absolutely fundamental "class object", the "zeroth object", which defines the concept of an object.

Everything that goes through our heads can be defined as an object -- not just natural and artificial material objects, such as rocks, trees, cars, smartphones; but also emotions; symbols, including numbers, signs, and emojis; moral concepts such as "justice"; events; rules and "rule sets"; actions and "scripts" of actions; and whatever.
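For readers who have not seen OOP in practice, the "class cat" hierarchy described earlier might be sketched as follows. The class layout is an illustration only, with attribute and method names invented for the example; it is a description of how such concepts could be organized, not a claim about how the brain stores them.

```python
class Animal:
    def __init__(self, name):
        self.name = name

class Mammal(Animal):
    pass

class Carnivore(Mammal):
    pass

class Feline(Carnivore):
    pass

class SmallMammal(Mammal):
    pass

class Cat(Feline, SmallMammal):
    """A housecat: a subclass of both felines and small mammals."""

    def __init__(self, name, gender, fur_length, fur_color):
        super().__init__(name)
        self.gender = gender
        self.fur_length = fur_length
        self.fur_color = fur_color

    def purr(self):
        return f"{self.name} purrs"

# "Ella" is an instance of class cat, with her own attribute values.
ella = Cat("Ella", gender="female", fur_length="short", fur_color="black")

print(isinstance(ella, Feline), isinstance(ella, SmallMammal))
print(ella.purr())
```

Recognizing "a cat" corresponds to matching the class; recognizing Ella corresponds to matching a particular instance.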

In addition, saying that objects are linked into hierarchical trees is an over-simplification, since we define links in any way that is useful or desired, such as a link between a cat and the family that keeps the cat. Alice sees Ella, and may recollect the family that keeps Ella. Similarly, if Alice recognizes a particular odor, it may bring up a reminiscence of a particular individual or event in her past.

Links tend to be bidirectional; if we think "dog", we may then think "cat", and if we think "cat", we may think "dog". Each link is a different path, however, and bidirectional links may not be of comparable strength. If we think of Ella, we may easily think of the family that keeps her, but if we think of the family, we might forget about Ella. Yes, there are object hierarchies, but they may only be parts of more elaborate object networks.
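One way to picture links of unequal strength is as a table of directed associations, each direction carrying its own weight. The sketch below is purely illustrative: the strengths, the recall threshold, and the function name `recalls` are all made up.

```python
# Directed association strengths; each direction is stored separately.
links = {
    ("Ella", "family"): 0.9,   # thinking of Ella readily brings up the family
    ("family", "Ella"): 0.3,   # thinking of the family may not bring up Ella
    ("dog", "cat"): 0.6,
    ("cat", "dog"): 0.6,
}

def recalls(source, target, threshold=0.5):
    """True if thinking of `source` is strong enough to bring up `target`."""
    return links.get((source, target), 0.0) >= threshold

print(recalls("Ella", "family"))
print(recalls("family", "Ella"))
```

Because the pairs are directed, the structure is a general network rather than a strict tree.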

Could it be said, then, that the mind is an object-oriented system? Yes and no; it can be seen as one, but forcing the parallel with OOP casts the mind in the mold of conventional computer software, which is misleading and not very useful. Software written using OOP has a well-defined object-oriented structure; with the mind, the object system is no more or less than a convenient means of description of a neural system that is in no way so neatly structured. Nonetheless, OOP does -- with its classes and instances, inheritances, hierarchies, and interconnections -- reflect our cognitive processes. Of course it does, since it was inspired by them.

* This broad parallel between OOP and the structure of the mind, as well as the parallel between biological and artificial neural nets, does suggest that if comparing the brain to a digital computer is misleading, it's not entirely wrong either. Broadly speaking, both the brain and computer are information-processing systems; they obtain a wide range of data inputs, process them, and respond with elaborate and presumably appropriate actions.

More significantly, as pointed out by the British mathematician and computer pioneer Alan Turing (1912:1954), digital computers are "universal machines". Turing had a very specific concept of what that meant, envisioning in essence a primitive computer processor that, directed by its programming, could scan along a tape of indefinite length, reading and possibly writing a sequence of "1s" and "0s", or other symbols, then perform actions as directed by those symbols. However, since such a "Turing machine" can be easily emulated on a digital computer -- implementations can be found online -- generalizations that are true of a Turing machine are also true of a conventional digital computer.

A universal machine is one that can execute any and all procedures that could be devised -- or at least "bounded" procedures, meaning those that don't require indefinite resources or time to complete. An "infinite loop" in a computer program -- a cycle that never ends, possibly gobbling up computer resources until the machine crashes -- is an unbounded procedure.
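A Turing machine of the kind described above can be emulated in a few lines. The following is a minimal sketch, not a canonical implementation: the function name, the rule-table format, and the two example programs are invented for illustration. The first program adds one to a binary number; the second never halts, so a step limit is used to cut it off, standing in for the resource exhaustion of an unbounded procedure.

```python
def run_turing_machine(program, tape, state="start", blank="_", max_steps=1000):
    """Run `program` on `tape` and return the final tape contents as a string.

    `program` maps (state, symbol) to (symbol_to_write, "L" or "R", next_state).
    """
    cells = dict(enumerate(tape))   # tape position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached; the procedure may be unbounded")
        symbol = cells.get(head, blank)
        write, move, state = program[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    return "".join(cells[i] for i in sorted(cells)).strip(blank)

# Binary increment: scan right to the end of the number, then carry leftward.
INCREMENT = {
    ("start", "0"): ("0", "R", "start"),
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("_", "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),
    ("carry", "0"): ("1", "L", "halt"),
    ("carry", "_"): ("1", "L", "halt"),
}

# An "infinite loop": keep moving right over blank tape forever.
RUNAWAY = {("start", "_"): ("_", "R", "start")}

print(run_turing_machine(INCREMENT, "1011"))
```

Swapping in a different rule table gives a different machine on the same emulator, which is a small-scale version of the universality being discussed.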

Traditionally, machines were built to perform a single task, or a limited range of related tasks. In contrast, a computer could -- with the necessary accessories -- perform any task that a special-purpose machine could; it's just a question of writing the software for the computer to create a new virtual machine. It is perfectly possible, as a significant example, for a digital computer to emulate a neural net; throw in a random-number generator, and it can emulate a noisy neural net. A conventional digital computer is not likely to be as efficient as a purpose-built artificial neural net, and implementing the 86 billion neurons in the human brain, let alone their interconnections, is out of the question at the present time.
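The remark about the random-number generator can be made concrete. In the sketch below, a single emulated neuron has random noise added to its summed input, so that it sometimes fires when it nominally shouldn't, and sometimes stays silent when it nominally should. The Gaussian noise model, the noise level, and all names and values are assumptions made for illustration.

```python
import random

def noisy_neuron_fires(weights, active, threshold, noise_sd, rng):
    """Threshold neuron whose summed input is perturbed by Gaussian noise."""
    total = sum(w for w, on in zip(weights, active) if on)
    return total + rng.gauss(0.0, noise_sd) > threshold

rng = random.Random(42)   # fixed seed so the run is repeatable
weights = [0.5, 0.3]
threshold = 0.6
trials = 1000

# Input sum 0.5, below threshold: fires only when noise pushes it over.
below = sum(noisy_neuron_fires(weights, [1, 0], threshold, 0.1, rng)
            for _ in range(trials))
# Input sum 0.8, above threshold: fails only when noise drags it under.
above = sum(noisy_neuron_fires(weights, [1, 1], threshold, 0.1, rng)
            for _ in range(trials))

print(f"fired {below} of {trials} times below threshold")
print(f"fired {above} of {trials} times above threshold")
```

With the noise level set to zero the neuron becomes fully deterministic again, behaving like the plain threshold unit of an ordinary ANN.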

The human brain is also a universal machine; it can execute any and all bounded procedures. Anyone who denies this can then be asked to identify a bounded procedure that a human cannot perform. That can't happen, since a human can do anything a digital computer can do, but far less efficiently. Indeed, in the days before digital computers, a "computer" was a member of a team of workers, usually women, who spent all their working time performing lists of routine calculations as components of a mathematical analysis.

The brain does well at things at which digital computers do poorly, while digital computers do well at things at which the brain does poorly. The digital computer is, in practice, an aid to the human brain, not a replacement for it. We build intelligent machines to be our servants.

The parallelism between brain and computer also suggests, on examining the Alien Attack game again, there is no more or less a mind-body problem than there is a "software-hardware" problem. All the Alien Attack game consists of is computer codes, stored in the smartphone's memory, being executed on the smartphone's hardware, generating the behavior of the game. All we can know or need to know about the Alien Attack game is the computer hardware, the program code, and the game's behavior.

Developers putting together the Alien Attack game can trace the execution of the game's software on the smartphone hardware, observing exactly what the game is doing for each software command. It makes no sense to ask: "How does knowing what the software is telling the hardware to do explain the game's behavior?" The reply from the developers would be: "We step through the program, and watch what happens."

The only things we can know about the mind are the operations of the brain's neurons and the mind's behaviors, as produced by the neurons. The big difference from the Alien Attack game is that there's much more to the human mind; it is, at present, impossible to trace the correspondence between activation of specific neurons and resulting behavior in useful detail. Nonetheless, we have no good reason to believe that the behaviors of the mind are the products of anything but the firing of neurons.
