* Given the concept of the human mind as the brain's virtual machine, running a world model, then how does it work in practice? The operation of the mind can be readily seen in mundane activities, such as shopping, or traveling, or exercising, or working through the day. That leaves the question of how consciousness fits into the human mind; but any close-up analysis shows consciousness to be an integral, and inescapable, aspect of it.

* If humans are not machines, they are at least mechanistic, in that their behavior follows a rhyme and a sort of reason. They think things out, they get things done. As a simple example, every week Alice makes up a shopping list for a trip to the supermarket, writing it down with pen and paper.

Alice goes through the kitchen and bathroom to see what she's running out of or has run out of, to write up a list of what she needs to buy. She may have also left an empty box or bottle on a countertop to give her a reminder, and may also check her pile of store coupons to see what she can get a deal on. She ends up with a cognitive network to guide her shopping trip.

The next day, she drives to the supermarket, guiding her car through traffic; she's very used to driving, and for the most part, she follows a script acquired by long experience, executing one action after another. Most of the time, when something happens and there's nothing surprising in it, Alice doesn't have to think it over -- she just knows what to do next. If she runs into something unexpected, for example a detour due to road construction, she has to rethink her normal drive. She thinks it over for a bit, considering alternative routes from the part of her world model that handles roads, and selects one that seems workable.

At the supermarket, she picks out an available parking slot; she prefers to park in the same area of the parking lot each week, so she can remember where to find her car. She then goes into the store, gets a shopping cart, and then rolls through the aisles, using the loose map of the store that she has in her head. She's confronted with a combinatorial explosion, tens of thousands of products, and necessarily only pays much attention to those products and brands she cares about.

She does know where to find classes of products, though sometimes the store organization is confusing -- particularly when it's been rearranged, requiring her to re-learn parts of the layout. If something's been changed, she may be confused for a moment, not being certain that there was a change from the last time she visited. She doesn't have a photographic memory.

While shopping, she has to review the shopping list repeatedly to make sure she hasn't overlooked anything; occasionally, she still forgets something anyway. Human cognitive networks are not necessarily efficient and not necessarily repeatable in their operation, so Alice has learned to incorporate error checking, double-checking, and petty tricks to ensure that her plans work more effectively. One trick she's learned is to draw a little star or other doodle next to a list item that isn't something she normally buys, so she won't forget it. She also checks most or all of the aisles in the supermarket, on the chance:

If she sees an item she didn't plan on buying and finds it interesting, she then has to evaluate whether she wants to buy it or not. Her calculation for that decision is based on a balancing of all the relevant factors that come to mind. This is not anything like an ordinary arithmetic calculation, but it does resemble a scheme of calculation known as "fuzzy logic".

There are different ways to perform fuzzy logic, but they are all based on the fact that humans tend to think in terms of ranges, not specific values. Alice has three general criteria in considering the item: EXPENSE, PERCEIVED VALUE, and PERCEIVED DRAWBACKS. Each of the three can be rated in five categories:

ZERO / NEGLIGIBLE, LOW, MEDIUM, HIGH, EXTREME

If the EXPENSE and the PERCEIVED DRAWBACKS are LOW, then Alice will buy the item even if the PERCEIVED VALUE is LOW, or anything greater than that. If the EXPENSE is HIGH and the PERCEIVED DRAWBACKS are LOW, or the reverse, then Alice will only buy the item if the PERCEIVED VALUE is HIGH as well. And so on.

This is a very simple-minded read on fuzzy logic -- more realistically, for example, different criteria may have different weights, some ranging only from ZERO to LOW, others being either ZERO or EXTREME, with nothing in between. Alice's reasonings in the supermarket are also informal: they are "heuristics", rules of thumb acquired mostly by experience, and not guaranteed to give an optimum answer, or even a very good one. Ultimately Alice's evaluations tend to be based on feel, on what she wants, which may change from moment to moment.
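As a rough illustration, the two rules spelled out above can be written as a comparison over ordered categories. This is only a sketch: the function name `will_buy` is hypothetical, and the rule that perceived value must match or exceed the worse of expense and drawbacks is an assumption that happens to reproduce the two stated cases. It is not a full fuzzy-logic system with weights or membership functions.

```python
from enum import IntEnum


class Level(IntEnum):
    """The five rating categories from the text, ordered lowest to highest."""
    ZERO = 0      # ZERO / NEGLIGIBLE
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    EXTREME = 4


def will_buy(expense: Level, value: Level, drawbacks: Level) -> bool:
    """Assumed rule: buy when the perceived value is at least as high as the
    worse of expense and drawbacks. It agrees with the two cases given in the
    text; every other combination is an illustrative guess."""
    return value >= max(expense, drawbacks)


# Expense LOW, drawbacks LOW: a LOW perceived value is enough.
print(will_buy(Level.LOW, Level.LOW, Level.LOW))
# Expense HIGH, drawbacks LOW: a MEDIUM perceived value is not enough.
print(will_buy(Level.HIGH, Level.MEDIUM, Level.LOW))
# Expense LOW, drawbacks HIGH (the reverse case): a HIGH perceived value is enough.
print(will_buy(Level.LOW, Level.HIGH, Level.HIGH))
```

A more realistic version would give each criterion its own weight and range, as the text notes, and the thresholds would drift with Alice's mood.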

This is how Alice makes decisions, on the basis of the balancing of factors, that being one of the basic functions of her brain neural network. Every time she goes to the store, she plays the same sort of balancing acts to make decisions:

Sometimes Alice doesn't even really think it over: she sees something she likes, she checks the price, then puts it in her shopping basket. There's a sort of fuzzy reasoning process there, but it just happens all at once, in a flash of insight. It's like picking up a piece of jigsaw puzzle and knowing it fits.

Human reasonings are typically fuzzy. It's amusing to read Sir Arthur Conan Doyle's old SHERLOCK HOLMES detective mysteries, watching the supremely logical Holmes tracking down criminals -- but eventually, it becomes obvious the stories are not much more than parlor games, with well-defined clues that lead to unambiguous conclusions. That isn't how real-world criminal investigations honestly work.

If a crime doesn't have an obvious solution, investigators collect forensic data, including testimony of witnesses, and try to find a pattern in it. If they can follow it up and arrest a suspect, they can obtain more data, to see if their suspicions are supported by the evidence. If they are, they can put together a case to present in court. The case may not be air-tight on the basis of evidence, with a jury deciding the outcome.

This process has little resemblance to formal logic; it is prone to bias and error -- but it's hard to do any better in the real world. If a conviction required a mathematical level of proof, there would be very few convictions. In the same way Alice, even confronted with as straightforward a job as shopping at the supermarket, could not possibly reduce the task to a rigorous scheme of logic.

Formal logic amounts to another cognitive network in the brain, featuring well-defined assumptions and neat rules, and the real world usually doesn't grant us either. Even if we do have things laid out neatly for us, we may not apply our reasoning correctly, humans not being all that well-tuned to formal logic. Any serious exercise in formal logic requires that we sit down and take the time to do it right.

Yes, our heuristic thinking does involve a certain logic, but it also involves insight, hunches and guesses, plus a good deal of "feel" -- arranged in a much less rigorous cognitive network. There are so many products at the supermarket that Alice can't know very much about most of them; she has to make decisions on the basis of limited information, and it would be an obsessive waste of effort to try to nail it all down. She comes up with rules of thumb to do the job, and keeps refining them over time.

Alice's shopping list is a guideline for getting the job done. Circumstances may lead to deviations from the plan, and having to think up modifications on the spot. Nonetheless, the shopping trip rotates around the written list. Some people have very good memories, but few could reliably remember everything on a shopping list of any length. This is one of the significant differences between human and animal intelligence: humans have developed tools to assist them in thinking, in this case pen and paper, which act as an extension of memory.

Alice doesn't have many other tools for the task. She can check online in advance for sales or attractive new products, or maybe shop online and have the groceries delivered. Future stores will also automatically observe what she puts in her shopping cart; send helpful messages, for example on sales, to her smartphone; and electronically bill her when she goes out the door. However, although machines can influence her purchases, they can't tell her what she wants to buy.

* For a more elaborate example, imagine that Alice has decided to take a road trip, a vacation, possibly to attend a special event at the terminal end of the trip. Having made the decision to go, she devises the plan, determines the route from maps, identifying night stops and visits to attractions along the way. Given her agenda, she gets online and determines:

Alice's trip plan ends up being a cognitive network linking together locations on the trip, attractions at locations, fuel, food, hotel accommodations, prices, and so on. Since it's difficult for most people to keep track of all the details in their heads, she writes them down, or possibly builds a spreadsheet to allow costs to be conveniently summed up.

Alice's ability to construct her trip plan is dependent on her previous experience in planning and taking trips. If she lacks experience, she may not be aware of important factors missing from her trip plan; if she's methodical, no doubt she's written a checklist for the task, and updates it as she acquires more experience. However, if she's going to a place she hasn't been before, she knows she won't be able to precisely plan her actions, and will of necessity have to improvise on the spot to get things right.

Her ability to construct a plan is also dependent on her imagination and problem-solving abilities. In evaluating alternative routes and schedules, she performs a mental simulation, at some level of detail, of taking alternate routes and visiting alternate locations. The more experienced she is in trip planning and taking trips -- able to recall lessons learned -- the more competent she will be in setting up a new trip plan. Again, given the noisy brain, she may have failures of imagination, missing options that seem obvious in hindsight; or she may have leaps of insight that give her options that don't seem at all obvious in hindsight.

After she comes up with a trip plan, she'll gradually refine it, finding shortcuts or new options and revising the plan accordingly. She deals with the plan in a hierarchical fashion, having a general understanding of it, then focusing on elements in detail, to as many levels of detail as needed. She checks it for consistency and seeks optimizations -- both of which reflect other fundamental operations of the brain's neural net.

Coming up with a plan that is fully consistent or optimized is problematic -- a combinatorial explosion making that troublesome or effectively impossible -- but attempting to come up with such a perfect plan would likely be another exercise in obsession, with benefits not worth the additional effort. Alice, in planning her trip, is solving a variation of the "traveling salesman" problem, in which a salesman has to visit a set of sales prospects, and needs to optimize the route. That gets ever more complicated as the number of stops in the route and factors involved increases, and becomes ever more difficult to solve.

However, Alice doesn't need a perfect plan; she just needs one that she finds satisfactory and that has no big holes in it -- so she gets by with heuristics, rules of thumb, and patches together a plan. Sometimes, of course, Alice won't be able to come up with a satisfactory plan; she sleeps on it, and decides: "This is no good." She may give up on it, or come up with a new plan, a new cognitive network, that may incorporate elements of the old plan.
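One common rule of thumb for traveling-salesman-style problems is the "nearest neighbor" heuristic: from wherever you are, go next to the closest stop not yet visited. The sketch below is only an illustration of that idea. The stop names and coordinates are invented, straight-line distance stands in for real driving distance, and nothing here is claimed to be how Alice actually plans.

```python
import math


def nearest_neighbor_route(stops, start):
    """Greedy heuristic: always drive to the closest unvisited stop.
    Quick to compute, but not guaranteed to give the shortest route."""
    route = [start]
    remaining = set(stops) - {start}
    while remaining:
        here = stops[route[-1]]
        nxt = min(remaining, key=lambda name: math.dist(here, stops[name]))
        route.append(nxt)
        remaining.remove(nxt)
    return route


def route_length(stops, route):
    """Total straight-line length of a route, leg by leg."""
    return sum(math.dist(stops[a], stops[b]) for a, b in zip(route, route[1:]))


# Hypothetical stops as (x, y) map coordinates; names and numbers are invented.
stops = {
    "home": (0, 0),
    "lake": (2, 1),
    "museum": (5, 1),
    "canyon": (6, 4),
    "event": (9, 5),
}

route = nearest_neighbor_route(stops, "home")
print(route, round(route_length(stops, route), 2))
```

The heuristic visits each stop exactly once and needs only a handful of comparisons per step, where checking every possible ordering grows factorially with the number of stops. The price is that the result is merely "good enough", which is all Alice is after.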

* Now suppose Alice finally goes on her road trip, and in the course of one segment of it, spends a day driving down the freeway. For much of the journey, she's passive, simply perceptive to her environment, maintaining control of her car, not really thinking unless something comes up -- an obstruction in the road, an animal crossing the road, some interesting feature alongside the road -- that is then assessed and, if necessary, acted on.

For example, if she sees a heavy cargo hauler near her, she may think: He's bigger than I am, give him some space. -- factoring in considerations such as the possibility that the driver of the big rig isn't aware she's there; the threat of being sideswiped or cut off; the possibility that the big rig may throw up rocks in her windshield; and the broader reality that the presence of the big rig puts constraints on her own ability to maneuver on the road. She has to evaluate potential threats, on occasion some that aren't immediately obvious, and consider options for how to react.

Once an issue that comes up is resolved, Alice goes back to a passive mode. She may listen to music or an audiobook to pass the time, and may think about other things, for example what she plans to do once she reaches her destination. Her brain's parallelism allows her to think about such things and drive -- though if something comes up, like a big rig rolling onto the freeway near her, that takes priority in her consciousness, with the music or whatever ignored until that issue is resolved.

Part of her mind is always focused on driving; if she gets distracted, for example in playing with the car's sound system, she may realize with alarm that she's forgotten she's on the road, knowing that could be fatal. She has to be more attentive when driving in a strange place than she does when driving around at home, since she doesn't have the same familiarity with the road network -- doesn't know exactly where she's going, doesn't know where the road hazards are. She finds it particularly challenging when she drives into a big city, with heavy traffic and an unfamiliar, often confusing road network. It may be so challenging that she has to turn off the music, since it's too much of a distraction.

Fortunately, Alice is a safe and careful driver. That means she doesn't pay so much attention to things not related to driving safely. As she drives along, she only selectively soaks up what she sees. At any one time, all she has is a minimal set of abstracts in the model, consisting of the road, vehicles on the road, and the landscape, for example an evergreen forest along both sides of the road. As new elements appear in the scene, she recognizes them and incorporates them into the world model. As signs appear, she glances at them and assesses whether they say anything of interest relative to her journey; if not, she gives them no further thought; if so, she updates her journey plan accordingly. If houses appear, Alice incorporates them into her world model without further thought; she'll only take particular notice of something if it is unexpected in her world model, for example a giant statue of Mickey Mouse.
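The selective updating described above can be caricatured in a few lines: new things are quietly added to the model, and only those that don't fit expectations are flagged for attention. This is a toy sketch under loud assumptions. The function name, the use of plain sets, and the fixed list of "expected" things are all invented for illustration, and a real world model is nothing so tidy.

```python
def update_world_model(model, expected, observed):
    """Add newly seen things to the model; return only the ones that were
    not expected, since those are what call for conscious attention."""
    surprises = []
    for thing in observed:
        if thing not in model:
            model.add(thing)
            if thing not in expected:
                surprises.append(thing)
    return surprises


# Hypothetical starting model and expectations for a forest highway.
model = {"road", "vehicles", "evergreen forest"}
expected = {"road", "vehicles", "evergreen forest", "road sign", "house"}

# Houses and signs are absorbed without comment.
print(update_world_model(model, expected, ["house", "road sign"]))
# An unexpected item is absorbed too, but also reported.
print(update_world_model(model, expected, ["house", "giant Mickey Mouse statue"]))
```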

While Alice may perceive her experience driving down the road as something like a movie without interruptions, that isn't the way it is. In the end, she really notices not much more than she needs to notice, and hardly remembers most of the journey. She may miss significant details, if they aren't drawn to her attention. In simple terms, Alice is as perfectly aware as she needs to be to get the job done -- but not much more than that.

* While Alice is traveling, Bob is at home, going about his daily life. His day is a sequence of usually simple activities, performed more or less automatically. For example, if Bob is in the bedroom and wants something from the kitchen, he walks to the kitchen and gets it. He doesn't construct a plan to do it; he just does it. He's certainly aware of what he's doing, but he doesn't have to think anything out. All he has to do is watch where he's going.

As David Hume pointed out, we don't really know how our motor actions take place: we decide to do something, and then we do it. If it's an action different enough from those we are used to, we have to try different things until we figure out how to do it. As a newborn baby, Bob knew how to move his arms and legs, but he just moved them around aimlessly. He had to struggle to learn the simplest things, such as walking. His parents could encourage him, but he essentially had to figure it out on his own.

In our maturity, we tend to forget such troubles. Bob types away energetically at a computer keyboard, simply thinking of what to write, usually without any concern for how his hands do the job. He can even chat with Alice while he's typing. We do still notice how hard it is to master our motor actions when we do something difficult, like juggling.

Bob, as part of his daily exercise routine, juggles a few minutes every day. The basic juggle patterns, at least, are simple -- in effect, throw a ball from right hand to left, throw a ball from left to right, catch a ball with the left and throw it back, catch a ball in the right and throw it back. Although there may be people so coordinated that they can be shown how to juggle and then do it, that isn't how most people work; they'll fumble again and again, until they start to catch on. There's no way to tell them just how to get their hands to work. With trial and error, with practice, eventually the proper motions will be recognized, and then become reflexive, habitual.

Once a stable, rhythmic juggle pattern is put in motion, it runs on automatic, the hands doing all the work without any specific supervision. It couldn't be done consciously; it's too fast. There's a perceptible latency in awareness, tests showing it's about a third of a second at minimum -- considerably longer, if the subject has to shift concentration from one thing to another. By the time Bob is aware that he's caught and re-thrown a ball, the event's over.

However, Bob has to concentrate on what he's doing, or he'll drop the balls -- incidentally, we can't describe how to concentrate either, we just do it or not. Bob, as he juggles, has a general idea of what to do with his hands, but he doesn't think out their movements in detail.

The mind operates, in terms of any actions we take, in a "predictive" fashion: it's concerned about what to do next. Whatever Bob did in the past is memory; whatever he's doing now is merely assessed to see if corrections are needed. He's certainly aware of the smooth rhythm of the motion of the balls, throwing the balls higher or lower to adjust speed to "adapt" his actions, and reacting to an anomaly, recovering from a fumble. Of course, the recovery is also reflexive -- experience has taught him how to do so, since he can't think fast enough to do it consciously.

Bob also pays increasingly close attention to details as he tries to juggle, identifying any alteration in his movements that improves his juggling, and spotting blunders that cause him to drop the ball. The more closely he focuses on the details of what he's doing, the more progress he can make in the effort.

* Juggling, of course, is only a trivial part of Bob's day. He spends the rest of it jumping from one task to another, in a more, or sometimes less, orderly fashion. To an extent, he follows a schedule, but that's only a framework for the day. No one of his days is exactly like another, and sometimes his schedule is disrupted by events.

Although his plan for the day focuses on tasks he needs or wants to get done, he's only single-minded in bursts; otherwise switching between tasks, or spontaneously diverted to other lines of thought -- possibly whimsical and amusing ones of no particular value, enjoyed for a moment, until Bob decides to get back to work. In the course of his labors, he's always learning a little bit of something new, figuring out ways to do things better, considering new and different options.

From the moment Bob gets up in the morning to the time he ends his day at night, he has a stream of consciousness that takes him through the day, if not in a straight line, repeatedly posing and answering the question: "What do I do next?" Most of his actions are directed by habit -- but intermittently, he needs to think things through a bit, and sometimes sit back, taking the time to figure something out.

During the day, Bob works through his scheduled tasks. It's not a continuous process: he may be interrupted, take a break, lose track of what he's doing, or just sit back and think things over. During the day, sometimes he may feel bored, engrossed, grumpy, amused, or excited -- but most of the time, he's just plodding through his work. Bob goes from one mental scene to another during the day, until he finally decides to stop and go to bed. This is not how any machine works, or how we would want a machine to work.

Bob is mechanistic, in that his mind works by rules that can be understood in principle, but in no way does he really resemble a machine. Once more, humans evolved; they were not built according to a specification. Bob is an autonomous agent, trying to cope with what the real world throws at him, learning how things work, developing procedures accordingly, and sometimes finding that he gets things wrong. His actions are under the control of a wide-ranging -- but inconsistent and open-ended -- world model that evolves, as he works to get a better grasp of the world. Bob does think things out, and generally does a good job of it; but ultimately, the lifestyle he has is the one that suits his preferences. Bob could choose between a wide range of different lifestyles, one generally being as good as another in its basis in facts, and has the one he does for no fundamental reason other than that it's the one he feels he wants.

* Bob makes use of tools to support his lifestyle. He has schedules for his activities, paced by his watch -- and, when necessary, checklists. He tries to log information, being particularly devoted to his smartphone, which allows him to keep text or voice notes, as well as index and access useful information online. Of course, he can also use it to call up people for information: "Do you have this item in stock?"

Bob, an enthusiastic photographer, really likes the smartphone's camera; he doesn't have a photographic memory, nobody does, but he takes photographs, and he spent extra to get a smartphone with a good camera. A photograph captures far more detail than Bob's eyes do, often revealing things to him later that he didn't see at the time, and provides memories that are far less ambiguous and inaccurate than his own. He takes pictures of things he wants to remember or investigate, and sometimes sends them to others to aid in communications. He can provide voice or text annotations to his shots.

Some who know Bob find him excessively structured in his activities -- but he is still selective in recording them. He has to be, since the real world confronts him with a combinatorial explosion of details that would overwhelm him if he tried to nail them all down, so he records what he feels he "needs to know". His photographs, though accurate in what they record, are selective; they only capture literal "snapshots" of the world, things Bob thought worth remembering.

There are people, called "life loggers", who actually try to digitally preserve every moment of their days. Of course, this is attempting to take on the combinatorial explosion of the real world, resulting in the accumulation of video, voice, and text files, the pile becoming increasingly overwhelming and unwieldy as it gets bigger -- making it very difficult to organize, or to find any one thing of interest in it. As discussed below, life loggers may be simply a bit ahead of the learning curve.

In any case, Bob's use of his smartphone to keep track of his life is another example of how machine intelligence complements and extends the human mind. The smartphone has only a minimal need to remember or learn things for its own use; its function is to remember things for Bob, and extend his reach into the internet. It is far beyond the imagination of Descartes.

* Having pieced together this brief, and necessarily superficial, survey of human cognition, we can then contrast the notion of consciousness in this vision with that of the Cartesian theater. As Dennett has pointed out, a close inspection of cognitive processes shows there's no reason to believe in a homunculus, a Harvey, watching the Cartesian theater -- but we do have a set of agents, specialized neural processors, each agent optimized for one task or set of tasks, attending to the various processes of the mind.

These agents are operating more or less in parallel, more or less in competition, sending multimedia messages -- abstracts -- to each other. Instead of one homunculus, we have a collective of specialized homunculi engaged in a group discussion, each one competing as an "opponent process" to address the collective in the stream of consciousness. One takes over with its message, to then be displaced by another in turn. Decisions are made through the interaction of the collective, with one agent getting its way and the others deferring.

The pioneering AI researcher Oliver Selfridge (1926:2008), in a 1959 paper, proposed a similar scheme for machine decision-making that he called the "Pandemonium" architecture, in which a set of "demons" -- agents -- competed with each other for control of the machine. "Pandemonium" was the capital of Hell in John Milton's epic poem PARADISE LOST of 1667, and the vision of demons arguing among themselves about what to do next captures the chaotic nature of the mind's decision-making process, with distinct agents in ongoing contention.

Order ends up emerging from the chaos, the resolution of the disputes yielding a sequence of actions that are, at least when things are going well, surprisingly coherent and efficient, the scheme being known as "contention scheduling". The specialized agents make up a "community of mind" that is capable of sophisticated behaviors, even though each individual agent is simple-minded.
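The flavor of this competition can be suggested with a toy winner-take-all loop, loosely in the spirit of Pandemonium: each "demon" shouts with some strength in response to the current situation, and the loudest one gets control. Everything specific here is an assumption made for illustration, including the demon names, the shout strengths, and the simple "loudest wins" rule. It is not a reconstruction of Selfridge's actual design.

```python
def run_pandemonium(demons, stimulus):
    """Each demon 'shouts' with a strength for the stimulus; the loudest one
    takes control. Returns the winner's name and all the shout strengths."""
    shouts = {name: demon(stimulus) for name, demon in demons.items()}
    winner = max(shouts, key=shouts.get)
    return winner, shouts


# Hypothetical demons: each maps a stimulus (a set of observed features)
# to a shout strength between 0 and 1.
demons = {
    "brake": lambda s: 1.0 if "obstacle" in s else 0.0,
    "change_lane": lambda s: 0.6 if "slow_truck" in s else 0.0,
    "keep_cruising": lambda s: 0.2,
}

for stimulus in [{"clear_road"}, {"slow_truck"}, {"slow_truck", "obstacle"}]:
    winner, _ = run_pandemonium(demons, stimulus)
    print(sorted(stimulus), "->", winner)
```

No single demon knows anything beyond its own narrow trigger, yet the sequence of winners looks like sensible driving: cruise, change lanes for the truck, brake for the obstacle.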

How can the sophisticated behaviors of the human mind be produced by such a jumbled and, on the face of it, inefficient system? In the first place, evolution doesn't produce nice neat designs like human-made machines; they may seem very disorderly. Notice the use of the word "seem"; in the second place, evolutionary designs can be surprisingly efficient, sometimes because they aren't like human-made machines.

William James, in an 1879 essay, examined the behaviors of "lower animals", like frogs, with James saying their behavior is "determinate", robotic, dominated by reflex, limited in adaptability. That reflexive behavior is carried over into the "reptilian brain", the parts of our brain inherited from our distant ancestors -- but of the parts of the brain that are more distinctly human, James observed:

QUOTE:

... the most perfected parts of the brain are those whose actions are least determinate. It is this very vagueness which constitutes their advantage. They allow their possessor to adapt his conduct to the minutest alterations in the environing circumstances ... An organ swayed by slight impressions is an organ whose natural state is one of unstable equilibrium.

END_QUOTE

In short, the seemingly chaotic Pandemonium architecture gives the human brain far more flexibility and ability to respond to circumstances than the reflexive frog brain. There's a "Darwinian" system to the brain, with competing agents "doing their own thing" spontaneously and not necessarily predictably -- with the community of the agents selecting behaviors that are most useful, or at least most interesting, through a voting system of sorts.

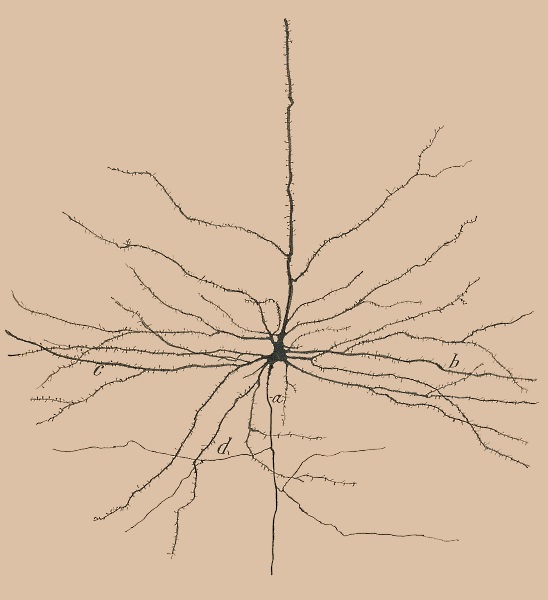

Along with flexible behavior, the Pandemonium architecture offers a way out of the distribution quandary posed by Leibniz: the brain is organized into subsystems, agents, each of which is further subdivided into associations of neurons -- Hebb's cell assemblies -- and ultimately down into individual neurons that each match a pattern. That breaks out of the infinite regress of the Cartesian theater, envisioning a watcher with an inner watcher, with the inner watcher having an inner watcher, and so on forever. Instead, the operation of the elaborate whole of the brain is divided down to the operation of a diversity of simple elements, just as with the mechanical clock.

In a sense, the neurons in the brain act as a "swarm intelligence" -- that is, like a beehive, a system in which the elaborate organization of the nest is derived from its individual similar bee elements, each of which has a small set of limited behaviors. Dan Dennett was fond of the notion of "competence without comprehension". Individual neurons in the brain effectively only do one thing, each mindlessly matching a pattern and firing, with the connected whole being much greater than the sum of the vast number of minuscule, highly interconnected parts.

Dennett's critics were inclined to claim he didn't believe comprehension really existed, but that's nonsense: the entire system of the mind comprehends, but no individual neuron has comprehension, any more than the individual pieces of a clock have some sort of "clockiness" in them. The brain's agents communicate among themselves by sending messages over a broadcast network, with schemes by which one of the agents gains priority over the others, dominating the network with its conscious thought, to then be displaced by another in turn. Dennett called this "fame in the brain" -- one agent manages to get its moment on center stage. It's like 1960s pop artist Andy Warhol's gag about everyone, in the future, being famous for 15 minutes, with each agent having its time on stage, quickly yielding to another.

Dehaene and his colleagues saw "fame in the brain" as established by a "global neuronal workspace", an idea originally devised by Dutch cognitive researcher Bernard Baars (born 1946), with his concept now known as "Global Workspace Theory (GWT)" or "Global Neuronal Workspace Theory (GNWT)". Ramon y Cajal noticed in his studies of neurons and the brain that, while most neurons in the brain were fairly short, forming only local connections, the upper layers of the cortex featured dense networks of long-range neurons -- which became known as "pyramidal" neurons, with a central core spanning out to regions separated by centimeters.

The pyramidal neurons establish a global broadcast network to link the brain's agents together. Dehaene's studies showed that the activation of this cortical network is correlated to conscious awareness of a stimulus. The signal associated with the activation of the cortical network, in an "avalanche" or "cascade", is known as the "P3 wave" -- because it is preceded by two earlier waves -- or the "P300 wave" -- because it takes place about 300 milliseconds after the stimulus. That's where the one-third second delay in conscious access comes from.

This network is why we perceive a unitary mind. In split-brain patients, the unitary mind is more or less cut in two, with communications between the two brain hemispheres rendered awkward and troublesome. In an intact brain, we have a single message being sent over a global broadcast network, providing a unifying signal to all the mind's agents, with some of them enabled for the moment, some of them suppressed. Dennett, an amateur sailor, liked to call this signal: "All hands on deck!" -- though it seems that he got that catchy phrase from others.

The unity, however, is imposed on a system whose elements tend to go off in different directions at once. Since there is only a single stream of consciousness, the various agents in the system end up dynamically compromising with each other, each making a contribution as the collective allows, with the contributions selected in an untidy fashion.

The untidiness is not so noticeable when we are going about our business, but it becomes obvious in the course of dreaming, in which the unfocused mind jumps, with little rhyme or reason, from one bizarre (abstracted) scene to the next. When we're up and about, we do manage to more or less stay on track, though occasionally we suffer from confusion, not being able to sort out the conflicts between the agents. For those unfortunates who can't sort them out, the result is persistent confusion, schizophrenia.

This scenario also suggests the line between conscious and unconscious: if one of the agents is doing its own thing, such as a reflexive action, there's not much need for conversation with other agents, though the action has to be monitored for accuracy and predictive control. There's also no need to know about the internal operation of an agent.

* For an example of consciousness at work, Alice plays the piano. When she starts out learning a new piano piece, it's a laborious and self-conscious exercise, reading the notes, trying them out on the piano keys, then repeating until she starts to feel comfortable with the piece. When she's really comfortable with the piece, she plays without thinking about it -- in a Zen fashion, totally attentive and absorbed, while her hands seem to play on their own -- directed by an unconscious process, an agent, that she's only indirectly aware of.

She can't think over what she's doing, it happens too fast to permit it; if she becomes too self-conscious of the playing, she goes off the rails. She can only think to play faster or slower, more softly or energetically, and notice errors for future correction. If she runs into a section of the piece that she doesn't play quite right, all she can do is be as attentive as possible while she repeatedly practices it, on the basis that her brain will figure it out sooner or later -- too often, later.

To play the piece well, she has to maintain focus, defying the competition between the various agents of her mind for her attention. If some agent in her mind interrupts her train of thought, she tells the agent to be quiet -- and since it's all part of the same system of mind, it usually goes quiet. If she's agitated or otherwise distracted, with persistent interrupts by agents, they may not shut up so easily; in the worst case, they will completely disrupt her practice. Alice may be able to momentarily shift attention, for example to check her watch for the time, without disrupting her performance -- but if her train of thought is fully diverted, her playing will continue on momentum for a moment, then crash and burn.

Alice's stream of consciousness is all about supervision, keeping track of what's happening and what she's doing, then deciding as a collective to shift gears in her actions as needed. As far as her actions themselves go, at least once she's practiced in them, she doesn't think about them in detail.

It's much like what goes on when Alice is using a pocket calculator: she decides what numbers she wants to multiply, punches them in on the keyboard, then presses the EQUALS key. The calculator, in response, performs the calculation out of sight, then displays the result. Alice doesn't care how the calculator does the job, all she knows or cares about is punching in the numbers, pressing EQUALS, and then getting the result.

It works much the same way if Alice performs a simple calculation in her head. If Alice is asked, say: "What is 6 + 5?" -- she sets up the addition in her mind and then gets the answer back -- "11" -- with no knowledge, or need to know, of the intervening steps. It's effectively a "look-up table" operation, since she had memorized the addition table for the numbers 1 through 10 in elementary school. For a more complicated calculation -- "What is (3*7 + 11)/2?" -- she has to think it out in three steps, just as if using a calculator, getting intermediate results "21", then "32", and finally "16". If the result were fractional, it would require even more steps of conscious thought.
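The contrast between the two kinds of calculation can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `ADDITION_TABLE`, `recall_sum`, and `work_through` are made up for the example, and nothing here is a claim about how a brain stores or retrieves sums.

```python
# Memorized "look-up table": sums of every pair from 1 through 10,
# built once (as if learned in school) and only consulted afterward.
ADDITION_TABLE = {(a, b): a + b for a in range(1, 11) for b in range(1, 11)}

def recall_sum(a, b):
    """Answer in one step, with no visible intermediate work."""
    return ADDITION_TABLE[(a, b)]

def work_through(start, steps):
    """Apply one operation at a time, keeping each intermediate result."""
    results = []
    value = start
    for op, operand in steps:
        if op == "*":
            value = value * operand
        elif op == "+":
            value = value + operand
        elif op == "/":
            value = value / operand
        else:
            raise ValueError(f"unknown operation: {op}")
        results.append(value)
    return results

one_step = recall_sum(6, 5)                                       # "What is 6 + 5?"
intermediate = work_through(3, [("*", 7), ("+", 11), ("/", 2)])   # (3*7 + 11)/2
```

The first call returns its answer directly from the table, while the second exposes the three intermediate results (21, 32, and 16) that correspond to the steps Alice has to think through consciously.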

On such examination, consciousness doesn't seem like it amounts to as much as we've been conditioned to think it does. As Dennett put it, we are inclined to believe we have a much deeper understanding of our thought processes than we actually do, but that's another illusion. As he said: "We have underprivileged access to the properties of our own mental states." Dehaene quoted Princeton psychologist Julian Jaynes (1920:1997): "Consciousness is a much smaller part of our mental life than we are conscious of, because we cannot be conscious of what we are not conscious of." Well yeah, of course we're unaware of things we're not aware of. Actually, that's not completely true: we can observe our own behaviors that reflect the subconscious mind -- as Alice does, as she reflects on how her hands, dancing over the piano keyboard, seem to be operating with a mind of their own. They are; they are tightly coupled to one of the mind's agents, devoted to the playing of the piece, and not in open communication with other agents. Some of the other agents, meanwhile, are keeping an eye on things, providing guidance to the agent that does the playing.

On examination of what is going on, there is no reason to think of consciousness as magical; it is instead a phenomenon with observable operations, characteristics, and limitations. Some people fuss about the subordination of the conscious mind, but why? The bottom line is that we are exactly as conscious as we need to be. If we otherwise get the job done right without it, where's the problem? The only problem is that people over-rate consciousness.

* Having discussed Dennett's notion of "fame in the brain", it must be added that he liked to say that "consciousness is an illusion" -- and of course, he got back loud howls of protest in response. Well-known neuroscientist Christof Koch (born 1956), in an essay published in SCIENTIFIC AMERICAN magazine in 2018, commented:

QUOTE:

Many modern analytic philosophers of mind, most prominently perhaps Daniel Dennett of Tufts University, find the existence of consciousness such an intolerable affront to what they believe should be a meaningless universe of matter and the void that they declare it to be an illusion.

END_QUOTE

The reply to this was given by Dennett a few years earlier:

QUOTE:

The best philosophers are always walking a tightrope where one misstep either side is just nonsense. That's why caricatures are too easy to be worth doing. You can make any philosopher -- any, Aristotle, Kant, you name it -- look like a complete flaming idiot with just a slightest little tweak.

END_QUOTE

In reality, Dennett was not a "flaming idiot", he wasn't saying there's nothing there. What he meant is that the stream of consciousness is not a Cartesian theater, it's much more like a performance of stage magic: "The hand is quicker than the eye!" -- and: "Nothing up my sleeve!" One might wish that Dennett had made himself clearer on this point; then again, he was very fond of invoking the clever illusions of stage magicians as explanatory examples in his work, and readers like Koch might also have been more attentive, instead of leaping to caricatures. Dennett didn't like to talk down to readers, preferring instead to assume they are intelligent. Sometimes that assumption was wrong; Dennett understood that, and accepted it as the price he had to pay.

It might be less troublesome to say that consciousness, instead of an "illusion", is "abstracted", or better yet "representational". Consider, as a useful illustration, a classic hexgrid strategy game. Such games feature a playing board in the form of a grid of hexagons, defining the map of a battlefield, with each hex defining a particular class of terrain: open ground, hill, mountain, swamp, lake, watercourse, and so on. The playing pieces are little squares of cardboard, marked with an icon for the combat element -- military units, or weapons like tanks -- plus numeric factors like the firepower, defensive capability, and mobility of that combat element. In the current day, they are often implemented as computer games, with the playing board and pieces usually represented in a more elaborate fashion.

In other words, the game is a highly rendered-down abstract of an actual battle. However, despite the abstracted nature of the game, players can become deeply engrossed in, and emotionally committed to, their bloodless battles, as if they were actually engaged in combat. Such games, incidentally, may be based on historical battles -- the battle of Gettysburg, the battle of Waterloo, the Normandy landings -- or be purely fantasy -- fighting alien invasions or zombie infestations. The representational gameplay works much the same whether the subject of the game is historical or fantastical.

We perceive the world around us in a highly representational fashion. Dennett liked to compare consciousness to the user interface on a personal computer or a smartphone: an abstracted system representing and permitting control over the smartphone's resources. We have file icons, mail icons, trashcan icons, game icons, but there are no physical folders, mail envelopes, trashcans, or game boards in the smartphone -- the icons are just a "user illusion". The personal smartphone is a virtual machine environment; that's obvious when playing a game like Alien Attack, not so apparent but just as true when we're typing up notes, or sending a message, or checking a calendar.

Apps and documents are all just bunches of bits, rendered manageable with icons and other representations that we use without difficulty, taking them for granted. However, the abstracted nature of the user interface becomes obvious in the case of the computer-phobic, who can't make the leap of visualization needed to use such a machine; they don't have a workable cognitive model, they simply see meaningless cartoons on the smartphone display, and end up ineffectually "pushing the buttons", while remaining hopelessly lost.

Along the lines of a personal smartphone's user interface, we could also compare the stream of consciousness to the cockpit of a modern fighter jet. Such a cockpit might have a large touch-sensitive display on the dashboard, with the pilot also having a display in the helmet visor -- flying the aircraft using throttle and stick, with controls mounted on each. The display system will indicate current aircraft and flight status, plus progress of a predefined mission plan; and most importantly, a model of the battlespace around the aircraft.

The battlespace model is informed by the aircraft's navigation / combat system, which gives its location on a map stored in memory; as well as inputs from radar, day and night optical systems, threat-warning systems, and datalinks from other platforms -- with the fighter's computer system performing "data fusion" to display a map for the pilot, reflecting the current landscape below and marking threats, like adversary missile launchers or fighters, as well as targets and friendly forces. Those who develop such systems call them "video games for keeps".

Okay, the fighter cockpit system is not different in kind from the smartphone interface, and both have exactly the same flaw: as Dennett would have admitted, they evoke the Cartesian theater. In the case of the fighter cockpit, the pilot -- we'll call him Major Jack "Thunderbolt" Harvey -- sits on an ejection seat, monitoring the world around the fighter through the displays. The advantage of the fighter cockpit scenario is that we can ground the pilot, and design the aircraft to fly itself as an autopiloted drone.

The system flies missions generally the same as before, but it's not like the autopilot needs displays; it's just fed inputs directly, and acts on them as necessary. In normal operation, the autopilot -- we'll call him "Otto" -- keeps track of aircraft and flight status, using the navigation system to determine progress of the mission plan. Otto has no use for displays; the data represented on a display to Major Harvey is instead sent directly to Otto as digital messages -- in effect, abstracts -- that tell him what he "needs to know", and nothing else. If a warning system detects a threat, it sends a message to Otto; he evaluates the specifics, and then responds with countermeasures.
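Otto's message handling can be sketched roughly as follows. This is a minimal illustration, not a description of any real avionics system: the `Message` fields, the subsystem names, and the canned "countermeasures" response are all assumptions made up for the example.

```python
from dataclasses import dataclass, field

@dataclass
class Message:
    source: str   # hypothetical subsystem name, e.g. "nav" or "threat_warning"
    kind: str     # "status" or "threat"
    data: dict    # only what Otto "needs to know"

@dataclass
class Otto:
    state: dict = field(default_factory=dict)     # what Otto currently knows
    actions: list = field(default_factory=list)   # responses he has decided on

    def receive(self, msg):
        if msg.kind == "status":
            # Status messages simply update what Otto knows.
            self.state.update(msg.data)
        elif msg.kind == "threat":
            # Threat messages are recorded, then answered with countermeasures.
            self.state.setdefault("threats", []).append(msg.data)
            self.actions.append(f"countermeasures against {msg.data['type']}")

otto = Otto()
otto.receive(Message("nav", "status", {"heading_deg": 90, "altitude_m": 6000}))
otto.receive(Message("threat_warning", "threat",
                     {"type": "missile launcher", "bearing_deg": 45}))
```

Nothing in the sketch is ever drawn on a display: each message is an abstract that goes straight into Otto's state, and he acts on it from there.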

From the abstracted messages, Otto builds up a world model, or more properly a battlespace model, to perform his mission. Of course, the battlespace model includes a sense of self, with Otto monitoring aircraft systems, as well as his location, heading, altitude, and speed. Otto accordingly has "sorta" a stream of consciousness -- unlike a human's, completely limited to his job, his "need to know" -- to carry out his mission. He does not, however, have a Cartesian theater. Neither do we, but we're inclined to believe there's one there. Of course we trick ourselves into thinking we're viewing the entire world around us in a smooth continuity; we'd go nuts if we were continually aware of the jumpy, fragmentary, and minimal nature of our stream of consciousness. We focus on what we "need to know", and that carries us along.

Certainly, even if we don't have a Cartesian theater, we do have a stream of consciousness -- as Dennett freely admitted. It's not so much a fiction, it's more like a historical documentary. Historical works are never mere lists of factual data; or if they are, nobody wants to read them. Even the best historical works consist of selectively-chosen facts integrated into a narrative, as constructed from the author's world view. A really good work of history tells a compelling and coherent story, never mind that it's imposed on a reality that simply isn't so orderly.

Consciousness is an illusion? OK, but only to the extent that we believe we're comprehensively wired up to the world around us, when all we've got is a selective and fragmentary view that we judge far more complete, detailed, coherent, and accurate than it really is. We are aware that we are aware, but we're not as aware as we think we are. And yes, as shown by the DK effect, there's nothing in it that rules out delusion -- believing there's something there, when there's no honest reason to think there is.
