* The creationist information theory / complexity argument against MET has an appearance of solidity. However, on close examination, it quickly evaporates.

* As discussed in the previous chapter, as far as contemporary information theory is concerned, the amount of information in a string depends only on how disorderly the string is. Given a bitmap image, the compression ratio only depends on the randomness of the image, it doesn't depend on what the image is -- the image could be of anything, even pure noise, and in fact noisy images have high information content. The compression algorithm is completely indifferent to the "meaning" of the image; information theory says nothing about it.

Creationists have realized that the definitions of information as provided by information theory don't provide a good basis for their criticisms of MET, and they have increasingly turned to concepts such as "functional information" or "complex specified information" that factor in what the information actually does -- as opposed to what might be called the "raw information" or "unspecified information" measured by conventional information theory. From the point of view of mainstream information theory, the idea that the "code" of the genome contains "information" while a snowflake does not is meaningless. When confronted with this fact, creationists reply: "We're not talking about raw information. We're talking about functional information."

The notion of "functional information" seems plausible, and it's certainly possible to provide measures of it -- but only for very specific cases, and for narrowly specific purposes. It may not be impossible to come up with a generally applicable scheme of quantifying "semantic meaning" that isn't arbitrary and mathematically useless, but people have tried hard, and so far not had much luck. Without any real ability to quantify "functional information", it is difficult to show that it is lost or gained in various processes, and no way to show any basis for a "Law of Conservation of Information".

For a simple example, take a six-digit keycode, like "375719", for a digital door lock to a room. It might seem obviously true that keycode has some "functional information" -- but if somebody changes the lock combination, it clearly doesn't any more, it's just a meaningless string of numbers. Even if the keycode is functional, it doesn't say anything about what's in the room; there might be nothing in there, and no reason to care about the room. The meaning of the keycode is defined by context, and changing the context changes or destroys the meaning.

For a subtler example, it might seem obviously true that the instruction sheet for putting together a model kit with a hundred parts contains more "functional information" than one for putting together a kit with ten parts. We can easily say that we should end up longer instructions, say ten times longer, for the more complicated kit, and in fact we could specify the "functional information" in the instructions for model kits as, say, the number of individual panels in the instructions.

However, the number of panels on the instruction sheet is just an ad hoc statistic of interest only to model builders -- and even at that, it doesn't say anything about the relative elaboration of the individual panels or the clarity of the instructions. Does a brief instruction sheet that's clear have more or less "functional information" than a long instruction sheet that's incomprehensible? There's nothing in the number of panels to permit comparison to anything but the instructions for another model kit, much less anything that could be used in some fundamental rule of information theory. The only thing we can say for certain is that one's bigger than the other; there's no mathematically useful way to say that one has more "functional information" than the other.

The same observations apply to other contexts. What sensible, broadly applicable scheme could be implemented to quantify the meaning of an image? Would a picture of the Mona Lisa get a high value and a pornographic cartoon get a low value? What about the "functional information" in of a book? Who hasn't read a book that talks a lot and says nothing? Would a book of fiction get a lower grade than a book of non-fiction? Does a really concise short book of non-fiction have less or more "functional information" than a long unreadable one?

Again, we can easily come up with any number of ad hoc measures of "functional information", but they buy very little. We could say, for example, that the recipe for making a cake defines the "functional information" in the cake -- but who can say that a cake with a more elaborate recipe is necessarily a better cake than a simpler one? More importantly, in what way would this exercise leaves us a bit wiser than we were from the simple realization that we need a recipe to bake a cake? Recipes certainly don't provide any useful way to quantify the "functional information" of a cake, and few would think the idea of a "Law of Conservation of Recipes" as anything but silly.

This difficulty in establishing the notion of "functional information" can be particularly well illustrated by asking the question: "How can we quantify the amount of functional information in an arbitrary computer program?"

It seems intuitively obvious that there is a certain amount of "functional information" in a computer program -- and if functional information can be calculated for anything, there should be some way to calculate it for a computer program. Just as with the model kit instructions, it seems apparent that the more complicated the task being performed by the program, the more functional information there is in the program.

However, once again this is very difficult to nail down. One problem is there's no known general way to assign a value to the functionality being implemented. We can certainly compare programs that, say, perform the same mathematical function to show which program is more compact and works faster, but how could we compare two programs that perform entirely different tasks? How could we assign values to the functionality implemented?

Even on an informal basis, it's very hard to determine any general relationship between the size of a program and what it accomplishes. A program that draws a real-world object of any particular elaboration tends to be long-winded, but elaborate iterative "fractal" patterns can be drawn by very simple programs, and the detail of elaboration of the fractal pattern can be multiplied by changing the number of iterations, without changing the size of the program in the slightest. Just adding random factors to the fractal program can increase the elaboration of the pattern still further -- and since a program with such random factors will generate different output with each run, its output features open-ended variety. How could we factor an open-ended range of detail into assigning a value to the functional output?

To make matters worse, some programmers can write more compact code than others, and different computer languages and algorithms may be able to do a particular job much more easily than others. To be sure, computer scientists can perform analyses on the "efficiency of algorithms", showing that some algorithms can perform a given task more efficiently than others -- but nobody performing such work would claim that implied an ability to do anything more than that, and nobody sensibly claims they can quantify the "functional information" in a computer program. Programmers tend to use "lines of code" as a measure of the size of a program, but this is much the same as the number of panels in model kit instructions: it says how big and cumbersome the program is, and roughly measures the amount of labor needed to write it, but it says little about how much it actually does or how well it does it.

BACK_TO_TOP* It's tricky enough to define the "functional information" in a computer program; trying to do it for anything that doesn't have a digital basis is dubious -- for example, what is the "functional information" in an elaborate clockwork device? To try to usefully define "functional information" for a biosystem that has only the remotest resemblance to digital machinery is hopeless.

Creationists sound confident when they speak of "functional information" or "complex specified information" or "biological information" or "complexity", but when asked to determine the functional information of a computer program, the result is an irritated stare, followed by evasions. The assertion of creationists that complex systems -- all complex systems, organisms, computer programs, books, Paley's watch -- have some measurable physical attribute named "functional information" or "complex specified information" remains nonsensical, attempts to define such an attribute ending up being ad hoc measures at best.

Going back to the comparison of the genome with a snowflake, the notion that the genome has "functional information" seems plausible at first. On the basis of an analogy with a computer program, the genome clearly has "instructions" that represent "functional information", and it's certainly possible to come up with ad hoc measures of the genome's "functional information". Unfortunately for such an argument, ad hoc is all such measures are. In terms of making a fundamental distinction between life and non-life, all such talk of "functional information" is irrelevant, the exact same laws of physics and chemistry still applying in both cases.

Stripped of its layers of misdirection, the argument being advanced by creationists is that, by comparison with human-made computer programs, the genome had to have been produced by some evasively-defined Cosmic Craftsman, a "Programmer". The genome certainly does look something like a program with lists of instructions, but this concept is nothing new, it's just part of the definition of "heredity". We know that heredity is one of the basic features of life, not seen in non-life; we know that heredity is defined by the DNA sequences of the genome. However, if we then go further and starting talking about the "information" in the genome, we know nothing that we didn't before.

All the discussion of "information" amounts to is ham-fisted reasoning by analogy, arguing that since programs -- or recipes or any other human-defined specification -- are designed by craftsmen, the genome must be designed by a Cosmic Craftsman. This is very much the same as saying the eye must be an Artifact because it looks like a camera, or a bird must be an Artifact because it looks like an airplane, or a pig must be an Artifact because it looks like a piggy bank -- without providing any further reason to believe they are the work of a Craftsman in any of these cases. In the end, the attempt to define a strong concept of "information" that distinguishes life from non-life ends up suspiciously similar in its fuzzy appearance to a re-invention of "elan vital".

BACK_TO_TOP* Before proceeding to conclusions about information theory and evolution, it is worthwhile to consider a few "fine print" issues.

FIRST: The discussion of information content so far has used compression of text files and bitmap image files as examples. Of course there are plenty of other examples, but a little examination shows they don't really bring much new to the party. For instance, consider the sort of imagery drawn by an action computer game. Such imagery generally consists of sets of lines (vectors), or more generally speaking polygons built up from vectors, used to construct "models" of objects in the game -- buildings, weapons, vehicles, characters. Those who work with such imagery actually do have an index of the complexity of the models: the number of vectors, or more usually polygons, in the model.

However, the number of polygons is yet another ad hoc measure, only useful for comparing one model to another, with little applicability to comparisons with anything else -- in fact, the number of polygons really doesn't do much more than provide an indication of how much computing power is required to generate the model. The model amounts to just another data file, and once again the amount of information in it is, as far as information theory is concerned, effectively the number of bytes in the file after compression. We get the same results for any other sort of file, say a MIDI music file -- we might some sort of ad hoc measure of information for the contents of any particular type of file, say the number of musical notes, but once again as far as information theory is concerned, the amount of information is the number of bytes in the compressed file.

* SECOND: Since information theory can only really address the quantity of information in a file and not the meaning of the information, any linkage between the nature of the data in of one file and the data in another file is, as far as information theory is concerned, largely meaningless. Suppose we have a text file written in English; and suppose we have the equivalent text in a second file, but written in Chinese. Can we say the two files have the same information? Intuitively, we might say YES, but as far as information theory is concerned, the question doesn't even make sense. Since information theory isn't concerned with meaning, the only useful comparison that can be made between the two files is their compressed size in bytes.

Similarly, suppose we have a PNG bitmap image file, and then make a copy shrunk down in size by half. Do the original and shrunken files contain the same information? Again, that doesn't make sense, the shrunken file contains fewer bytes, and by that alone has less information than the original; if we doubled the size of the original file, it would have more information than the original.

The same goes for any case where two different files contain alternate representations of the same message. We could, for example, list a small text file on a PC display and save the text as a bitmap file; or we could similarly display a computer model and save the image of the model as a bitmap file. In either case, do we have the same information in each pair of files? Once again, the question is "not even wrong", it just makes no sense. In each case, the two files are different messages that happen to describe the same item. After compression, the two files take up a certain amount of space on a hard disk, and that's all that information theory really says about the matter. The fact that the contents of the two files have some sort of logical connection is, as far as information theory is concerned, mostly irrelevant.

* THIRD: The argument expressed here has assumed "lossless" compression, meaning that the compression doesn't lose any information. There's "lossy" methods that can obtain much higher compressions, but they do so by degrading the information, reducing the "noisiness" of the data: it's cheating, like trimming off parts of something so it fits into a cramped box. Any level of compression can be obtained as desired, but as the compression ratio is increased, the data becomes more and more degraded until it's completely useless.

Lossy compression works okay for things like photographic images, video, and audio, but not for things like text files -- nobody wants any part of their message to be thrown out, since losing even a single letter might render the message misleading or incomprehensible. In terms of mainstream information theory, lossless compression is more or less a red herring: it implies no real change in the argument.

* FOURTH: There have also been attempts to undermine the idea that a file containing random strings like "XFNO2ZPAQB4Y" has high information content by pointing out that it would be very simple to create an algorithm to generate as many random strings as one likes, and so such a file actually has low information content. However, that's bogus, claiming in effect that one file full of gibberish text is equivalent to another, that they are exactly the same message. That may be true from a reader's point of view, but the problem is that this is sneaking in a consideration of "meaning" (or the lack thereof) that information theory can't say anything about.

The objective is in effect to compress a file, and then decompress it exactly as it was before. The compression algorithm will get a text file and will compress it without concern for whether it contains readable text or sheer gibberish. The decompression will restore the text file, providing an exact duplicate, letter for letter, of the original. If the file contained what appeared to be sheer gibberish that was, unknown to everyone except the person sending it and the person receiving it, actually an encrypted message, simply providing another file of entirely different gibberish generated at random would be useless, throwing the encrypted message in the dumper. Even from a reader's point of view, one file full of gibberish text is not necessarily equivalent to another.

Carrying this thought a bit further, from the point of view of cryptography, a file full of purely random characters that everyone else would judge as sheer junk is crammed full of useful information, since it can be used as an encryption key, in effect a set of instructions on how to perform a scrambling of a "real" message. The more random and incomprehensible the file, the more impenetrable and useful an encryption key it is. Every such file of random characters is unique information: a codebreaker has to have the specific encryption key to crack the message. Attempting to decrypt using a different encryption key will produce gibberish for an output.

If a creationist is unimpressed by this reasoning and continues to insist that a file of gibberish characters is still gibberish, then we can ask:

That leads straight back to the issue of "functional information", showing how fuzzy it is. As Shannon made it clear, the only difference between message and noise is that one is wanted, the other not; there's nothing in the information itself that inherently defines it as message or noise, "functional" or "nonfunctional", "specified" or "unspecified". Functional information can only be defined in terms of a given context. Without that specific context, there's no way to define "functional information", much less to quantify it in a useful fashion. There's no way to show that any generalized notions of "functional information" have any serious utility.

* FIFTH: Creationists, for whatever reasons, like to proclaim that "information is immaterial" or "information is non-physical". It is not clear what the point is supposed to be; it seems to be an attempt to establish a mystical connection to information theory, or maybe it's just another creationist exercise in muddying the waters. It's nonsense, in any case. If all physical representations of, say, a particular piece of music -- a written score, digital representations on a computer, the synaptic connections forming the memory of the piece in the brain of a musician -- were erased, that information would cease to exist.

* SIXTH: As an absolutely final consideration, some creationists have attempted to back up their "Law of Conservation of Information" by citations of physicists referring to "information conservation". This is an argument of desperation: all the physicists mean by the phrase is that, in principle if not necessarily in practice, the past history of a physical system can be traced back to all its previous states. That has absolutely nothing to do with the notion that "only an intelligence can create information". The two notions have as much in common with each other as Rome, Italy, has to do with Rome, New York: they have the same name.

BACK_TO_TOP* Having acquired definitions from information theory, we can now return to the supposed "Law of Conservation Of Information" in its various forms. One way that it is expressed is that there is no way for "information" to arise from "randomness". For example, as one creationist put it:

BEGIN_QUOTE:

Information theory states that "information" never arises out of randomness or chance events. Our human experience verifies this every day. How can the origin of the tremendous increase in information from simple organisms up to man be accounted for? Information is always introduced from the outside. It is impossible for natural processes to produce their own actual information, or meaning, which is what evolutionists [sic] claim has happened. Random typing might produce the string "dog", but it only means something to an intelligent observer who has applied a definition to this sequence of letters. The generation of information always requires intelligence, yet evolution claims that no intelligence was involved in the ultimate formation of a human being whose many systems contain vast amounts of information.

END_QUOTE

This statement seems impressively authoritative, but it was just pulled out of the air. If random changes are introduced into a string, the information content is likely to keep getting bigger and bigger. Suppose a data file gets corrupted; does it gain or lose information? It actually gains information by adding randomness to the string -- "GGdGXXTTTyT" has more information than "GGGGXXTTTTT" because it can't be compressed as much. As far as information theory is concerned, information absolutely arises out of randomness: the more random the information, the less "air" that can be squeezed out of it.

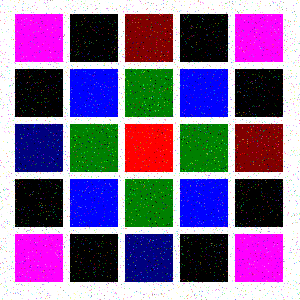

To the general public, the idea that information theory says that random changes introduce information may seem counter-intuitive, since most people would think that random changes would amount to "noise" that would corrupt "information". For example, imagine the simple structured image file used as an example in the previous chapter; if it became corrupted, say with little colored speckles randomly sprinkled over the image:

-- it would seem plausible to say that there had been a loss of information. However, once again, the problem is that "meaning", in this case the picture contained in the file, isn't the issue addressed by contemporary information theory. The speckles mean more information to compress, with the compression algorithm needing to record where the speckles are so they can be reconstructed later, and so the compression ratio will not be as great. While the original file compressed to 1.12 KB, a half percent of its uncompressed size, this "degraded" file only compresses down to 47.4 KB, 18% of its uncompressed size. The random changes added information to the file. The fact that they have resulted in a less pleasing image is irrelevant.

A comment along the lines of "information theory states that information never arises out of randomness or chance events" is, again, nonsense; it says no such thing. Indeed, as Chaitin pointed out, generating a large amount of information requires either a very long-winded calculation or a source of randomness. In other words, the complexity of the genome is entirely consistent with its origins in random genetic variation. The attempt by creationists to "move the goalposts" and proclaim they actually mean "functional information" is crippled by the fact that they cannot provide anything resembling a clear definition of the concept, much less show how it supports a "Law of Conservation of Information".

* Another creationist went on in the same vein, attacking the idea that MET could account for, say, the transformation of a hemoglobin gene into an antibody gene:

BEGIN_QUOTE:

There are two fallacies in this argument. The first is that random changes in existing information can create new information. Random changes to a computer program will not make it do more useful things. It doesn't matter if you make all the changes at once, or make one change at a time. It will never happen. Yet an evolutionist [sic] tells us that if one makes random changes to a hemoglobin gene that after many steps it will turn into an antibody gene. That's just plain wrong.

END_QUOTE

Again, the argument is trying to leverage off an implied law of information theory -- "random changes in existing information cannot create new information" -- which is actually a fiction, based on a fuzzy definition of the term "information". Once that red herring is thrown out, the creationist is merely making the classic "monkeys & typewriters" argument against MET, comparing evolution to the idea of monkeys pounding on typewriters and producing Shakespeare: "It doesn't matter if you make all the changes at once, or make one change at a time. It will never happen."

In reality, anybody who thinks MET is about monkeys blindly hammering on typewriters hasn't understood the essential point of the whole concept: the random changes matter a great deal, as long as each change is screened by selection and allowed to accumulate. This particular example makes it plain what creationists are trying to say. In effect, given an image file, they're claiming that random changes of the picture dots are not going to result in a proper image of anything; by implication it requires intelligence to create a useful image.

Random changes by themselves of course won't produce a useful image, but there are two fallacies in this argument:

Although the Law of Conservation of Information is trotted out at regular intervals, it's a zombie -- dead but still walking around, merely used as part of the creationist assertion that mindless evolution cannot account for the complexity of life. In broad terms, as one creationist insisted, MET "neither anticipates nor remembers. It gives no directions and it makes no choices." That is more nonsense:

Creationists still demand to know where the information -- the "instructions", the "functional information" if we have to use that dubious term -- acquired by the "memory" embodied in the genome comes from. Biologist Richard Dawkins provided an eloquent answer:

BEGIN_QUOTE:

If natural selection feeds information into gene pools, what is the information about? It is about how to survive. Strictly it is about how to survive and reproduce, in the conditions that prevailed when previous generations were alive. To the extent that present day conditions are different from ancestral conditions, the ancestral genetic advice will be wrong. In extreme cases, the species may then go extinct.

To the extent that conditions for the present generation are not too different from conditions for past generations, the information fed into present-day genomes from past generations is helpful information. Information from the ancestral past can be seen as a manual for surviving in the present: a family bible of ancestral "advice" on how to survive today. We need only a little poetic license to say that the information fed into modern genomes by natural selection is actually information about ancient environments in which ancestors survived.

END_QUOTE

The instructions in the genome were acquired the hard way, through sheer trial-and-error experience. A jumble of "unspecified information" -- indiscriminately good, bad, or indifferent -- arose through genetic variation, with the "nonfunctional information" tossed out by the Grim Reaper and the "functional information" left behind to act as instructions. The instructions encode the past experience of an organism's ancestors in the form of adaptations, with no foresight to the future. This is essentially just the definition of evolution by natural selection.

Evolution honestly is a design process, uncomprehendingly following a certain set of rules to come up with innovative solutions to the problems of survival and propagation. Much the same evolutionary approach can be and is successfully used in design software that has no more comprehension of what it is doing; incidentally, structural elements designed by such programs have a clear resemblance to structural elements in organisms. Evolutionary designs, although tending towards the ad hoc, may be exquisitely elegant and elaborate; as biologists like to say: "Evolution is cleverer than we are."

Clever yes, but no mind involved. In the present day we have developed very intelligent software and machines that nobody would judge would have a mind, but we still describe them as "smart". Our machines are much more sophisticated than the Reverend Paley's watch, and have gone beyond his simplistic thinking. Creationists insist that the elaborations of organisms prove the existence of a Cosmic Craftsman -- but evolutionary design can do the job, no real question of it, exploiting endless variations of the genome, tirelessly filtering out results that don't make the grade, and literally having all the time in the world.

Richard Dawkins famously said that organisms are "complicated things that give the appearance of having been designed for a purpose." They have been; they were designed by evolution with the goals of survival and reproduction, being tuned to their lifestyles -- bat, dolphin, cheetah, panda, each with its own tools and tricks to get along in the world.

* In any case, information theory poses no real challenge to MET. Despite that, creationists press ahead regardless, hoping to muddy the waters using information-theory arguments characterized by fallacies:

Such exercises amount to starting a conclusion, and then churning out contrived arguments to prop it up. Creationist information theory is a hoax, a verbal smokescreen, the "Law of Conservation of Information" merely being the basic creationist assertion that evolution is impossible -- presented as a law of nature using mathematical window-dressing.

The appeal of the information theory / complexity argument to creationism is that everyone has some intuitive concept of the idea of "information", and it's no real effort to come up with plausible-sounding arguments whose lack of substance can be easily concealed in a fog of bafflegab that sounds sophisticated to the naive. The reality is that the creationist information theory attack on MET is nothing more than meaningless doubletalk, intended to confuse. It has no scientific credibility. Information theory may have some useful application in the biological sciences, but MET neither stands nor falls on it.

BACK_TO_TOP